
Authors

The list of book contributors is presented below.

Tallinn University of Technology

- Rahul Razdan, Ph.D

- Mohsen Malayjerdi, Ph.D

Silesian University of Technology

- Roman Czyba, Ph. D., DSc., Eng.

- Piotr Czekalski, Ph. D., Eng.

- Tomasz Grzejszczak, Ph. D., Eng.

Riga Technical University

- Agris Nikitenko, Ph. D., Eng.

- Karlis Berkolds, M. sc., Eng.

- Larisa Survilo, M. sc., Eng.

ITT Group

- Raivo Sell, Ph. D., ING-PAED IGIP

ProDron

- Tomasz Siwy, CEO

Czech Technical University

- Ing. Libor Přeučil, CSc. (Ing. = Master of Engineering, CSc. = Ph. D.)

- Ing. Karel Košnar, Ph.D. (Ing. = Master of Engineering)

Technical Editor

- Raivo Sell, Ph. D., ING-PAED IGIP

External Contributors

Reviewers

Project Information

This content was implemented under the project: SafeAV - Harmonizations of Autonomous Vehicle Safety Validation and Verification for Higher Education.

Project number: 2024-1-EE01-KA220-HED-000245441.

Consortium

- ITT Group, Tallinn, Estonia (Coordinator)

- Silesian University of Technology, Gliwice, Poland

- Riga Technical University, Riga, Latvia

- Czech Technical University in Prague, Prague, Czech Republic

- Tallinn University of Technology, Tallinn, Estonia

- Prodron, Gliwice, Poland

Erasmus+ Disclaimer

This project has been funded with support from the European Commission.

This publication reflects the views only of the author, and the Commission cannot be held responsible for any use which may be made of the information contained therein.

Copyright Notice

This content was created by the SafeAV Consortium 2024–2027.

The content is copyrighted and distributed under CC BY-NC Creative Commons Licence and is free for non-commercial use.

Introduction


Content classification hints

The book is a comprehensive guide for a variety of education levels. A brief classification of the contents by target group may help with selective reading and make it easier to find the chapters suited to the desired education level. To indicate the intended target group, icons are assigned to the top-level headers of the chapters. The icons and the target groups they denote are listed in Table 1.

Autonomous Systems


Autonomous systems are systems capable of operating independently, making decisions and adapting to a changing environment without direct human intervention. They collect information about their environment, process it and, on that basis, perform tasks in accordance with programmed goals over extended periods without constant supervision. A key feature of autonomous systems is their ability to self-regulate and to operate independently of external control. They use sensors (e.g. cameras, radars, ultrasonic sensors) to collect information about the environment; the collected data are processed, and decisions about further action are made on their basis. What exactly is autonomy? The autonomy of a system can be defined as its ability to act according to its own goals, norms, internal states, and knowledge, without external human intervention. Under this definition, autonomous systems are not limited to robots or unmanned vehicles, commonly known as drones; the definition also covers any automatic function that reduces the workload of, or supports, the person driving a vehicle.

Autonomous systems make decisions and operate without human intervention on the basis of data and predetermined rules. To perform tasks independently, they use advanced technologies such as artificial intelligence, machine learning, neural networks, and the Internet of Things. Autonomous systems are a cornerstone of today's Industry 4.0 and are used in areas ranging from robotics, through transport and logistics, to medicine and education. Examples include an autonomous car that makes decisions on its own based on sensor data, or an automated guided vehicle (AGV) designed to transport loads safely and efficiently in a warehouse without operator supervision. Another application is production systems that, based on data from industrial sensors, automatically control production processes and machines and optimize production. This shortens production times, reduces production costs and increases product quality. In transport and logistics, autonomous systems enable faster and more efficient delivery of goods; thanks to the Internet of Things and monitoring systems, every stage of transport, from loading to delivery, can be tracked, which allows better control of the process. In medicine, autonomous systems act as a doctor's advisor, enabling faster and more precise diagnosis of diseases and more effective treatment of patients. In education, they allow the teaching process to be adapted to the individual needs of students and thereby improved.

Autonomous systems are becoming an increasingly important part of our lives, and their development and application will have an increasing impact on the future. This study focuses on autonomous control of unmanned ground, aerial, and marine vehicles.


Autonomous Vehicles


Autonomous vehicles, also known as self-driving vehicles, are platforms that can move and make decisions without direct human intervention. They use advanced technologies such as artificial intelligence, sensors, cameras, and navigation systems to analyze their surroundings and make decisions in real time. The autonomy of a mobile vehicle means its ability to determine its own control signals independently; for mobile platforms, this primarily involves planning a route to a destination. To make these decisions as correct and optimal as possible, the vehicle needs a good understanding of its environment, which in practice is often unknown and unpredictable. Autonomous vehicles have the potential to increase road safety, reduce traffic jams, and improve access to transport for people who cannot drive. In recent years, many companies, including Tesla, Waymo, and Uber, have invested in the development of these technologies, leading to intensive research and testing on public roads. As technology advances, autonomous vehicles may become increasingly common in our daily lives.
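Route planning to a destination can be illustrated with a minimal grid-based A* search. This is only a sketch under simplifying assumptions: the map is a static, fully known occupancy grid, moves are 4-connected with unit cost, and the function name `astar` is hypothetical. Real vehicles plan over road graphs or continuous state spaces and replan as the environment changes.

```python
import heapq
from typing import Optional

Cell = tuple[int, int]  # (row, column)


def astar(grid: list[list[int]], start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = obstacle)."""
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance never overestimates the cost of unit-cost grid moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries: (f = g + h, g, cell)
    came_from: dict[Cell, Cell] = {}
    best_g = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:  # walk parent links back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > best_g[cell]:
            continue  # outdated heap entry; a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                if g + 1 < best_g.get(nxt, float("inf")):
                    best_g[nxt] = g + 1
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (g + 1 + h(nxt), g + 1, nxt))
    return None


# A 3x3 map in which a wall forces a detour around the right-hand side.
grid = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
route = astar(grid, (0, 0), (2, 0))
```

Because the heuristic never overestimates, the first time the goal is taken from the queue the path is a shortest one.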

Unmanned vehicles, whether ground, aerial, surface, or underwater, are versatile platforms used in fields ranging from area monitoring to search and rescue (SAR) operations. Thanks to the development of a wide range of high-precision sensors and algorithms for estimating motion parameters, unmanned vehicles can localize themselves, detect objects, and avoid obstacles. New technologies are gaining importance, especially as vehicle autonomy increases. Autonomous navigation of unmanned vehicles remains a significant challenge: it is a complex and rapidly developing area of technology with the potential to revolutionize many industries and applications.

The growing importance of autonomy across sectors is clearly visible, which is not surprising given the advantages of these systems. Platforms with a high level of autonomy can operate in environments with poor or limited communication, significantly reducing their dependence on remote control stations. This capability, together with a greater operational range, is one of the key attributes for mission execution. As autonomy levels increase, operations will shift from the classic model, in which one operator remotely controls one vehicle, to the swarm concept, in which a single pilot/operator controls more than one vehicle simultaneously.

Global transport has been undergoing dynamic transformation in recent years. Key trends shaping it include mobility, multimodality, sharing, ecology, and autonomy. The aging population and the need for multitasking will also have a significant impact on transport in the coming years, providing additional impetus for the development of autonomous means of transport. Autonomous transport is no longer a futuristic vision, but a reality that surrounds us. Automated metro systems, shuttle buses, and drones are all examples of the use of autonomous devices to transport people and goods. For now, these are niche solutions, but with further technological development, we can expect the autonomy of other modes of transport, including the most popular road passenger transport. The acceleration in the development of autonomous transport systems is linked to the rapid development of machine learning techniques in recent years. Increased computing power, combined with increasingly affordable cameras and sensors, has enabled the emergence of self-learning artificial intelligence systems capable of analyzing road conditions and making decisions, including those related to vehicle control. As technology advances, investments in autonomous vehicles are increasing. Although the largest companies have yet to create a finished product or generate a profit, investors are hopeful of an autonomous road revolution in the near future. Level 2 vehicles (advanced driver assistance systems) are already available for sale, while work on fully autonomous vehicles is underway. In November 2019, the first driverless taxi service was launched in Phoenix, USA (in a limited area and for a limited number of customers). In Poland, the most intensive progress in autonomous transport involves drones. In 2018, the Central European Drone Demonstrator was established in the Upper Silesian-Zagłębie Metropolis, an initiative for the development of urban drone transport, including autonomous transport.

Autonomous transport relies on sensors that analyze the surroundings. Sensors account for a large share of an autonomous vehicle's production cost, making these vehicles expensive. They also generate a huge data stream whose analysis requires significant computing power, which increases energy consumption. Autonomous vehicle traffic relies on continuous vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) communication. Recent years have been marked by intense competition between two V2V communication systems: ITS-G5 (based on the Wi-Fi standard) and C-V2X (based on 4G or 5G mobile networks). The development of autonomous road transport will require equipping road infrastructure with appropriate sensors to coordinate vehicle traffic, and 5G technology offers a significant opportunity to improve V2I communication. In the field of drones, miniaturized ADS-B aviation technology, successfully combined with LTE, has proven highly effective. A major challenge for autonomous transport will be defending against cyber threats: remote takeover of a vehicle, data theft, cyberespionage, and malware that deceives sensors are just some of the threats modern society must face. Another challenge will be creating regulations that govern liability for potential damages and the rules for sharing transport infrastructure between different types of vehicles; such regulations must respond to public expectations and concerns. Adapting the infrastructure to the needs of autonomous vehicles, including equipping it with appropriate sensors and perhaps redesigning it more broadly, will be both a challenge and a cost. While it is not yet clear what scope of transport will be automated, the gradual introduction of autonomous solutions to road, rail, maritime, and air transport is certain.

Due to the specific requirements of the operational environment, autonomous land, air and sea vehicles will be discussed separately in the following subsections.

Ground, Aerial, and Marine Vehicle Architectures

Introduction

Over the past two decades, the rapid evolution of digital technologies has transformed the design, deployment, and operation of autonomous systems. Advances in artificial intelligence (AI), robotics, and sensors have driven the emergence of intelligent platforms which, depending on their application domain and specifics, can operate with limited or no human intervention. This transformation spans ground, aerial, and marine environments, each presenting distinct challenges yet sharing a common architectural foundation centred on perception, decision-making, and control [1,2]. Today, systems are no longer perceived and designed as isolated machines but as integral parts of a broader digital ecosystem involving cloud computing, edge processing, and distributed intelligence [3]. The ongoing Industry 4.0 and emerging Industry 5.0 initiatives emphasise human–machine collaboration, sustainability, and adaptability, all of which depend heavily on robust and modular system architectures. A key enabler of this transformation is the system's architecture: the structured framework that defines how system components interact, communicate, and evolve. In autonomous systems, the architecture governs how sensor data is interpreted, how decisions are made in uncertain environments, and how control actions are executed safely and reliably. For instance, in self-driving cars, architectural layers coordinate LiDAR, camera, and radar inputs to produce real-time navigation decisions; in drones, they manage flight stability and mission autonomy; and in underwater robots, they handle communication delays and localisation challenges [4,5]. The following sections discuss, in order, the general concepts of architecture for autonomous systems, the typical autonomous system software architecture, reference architectures, and the application domains of aerial, ground, and marine vehicles.

General Concepts of Architecture for Autonomous Systems

Software architecture represents the high-level structure of a system, outlining the organisation of its components, their relationships, and the guiding principles governing their design and evolution. In autonomous systems, architecture defines how perception, planning, and control modules interact to achieve autonomy while maintaining safety, reliability, and performance [6]. The primary purpose of an autonomous system’s software architecture is to ensure:

- Scalability: The ability to integrate new sensors, algorithms, or mission modules.

- Interoperability: Compatibility with other systems and communication protocols.

- Maintainability: Ease of updating or modifying individual modules.

- Safety and fault tolerance: Robustness against sensor failure, communication loss, or software bugs.

- Real-time responsiveness: Capability to process environmental data and respond within strict temporal limits.

Architectural design in autonomous systems typically follows several universal principles:

- Modularity: Systems are divided into well-defined modules (e.g., perception, localisation, path planning, control), allowing independent development and testing. This enables functional isolation, extensibility, and easier maintenance of the system.

- Abstraction: Functional details are hidden behind interfaces, promoting flexibility and reuse. In general, the higher the level of abstraction, the easier system development, testing, and application become; abstraction also reduces design complexity at every layer.

- Layering: Tasks are grouped by level of abstraction, for instance, hardware interfaces at the lowest level and mission planning at the highest. Beyond functional abstraction, layering allows different technical implementations at different levels, which is needed to meet different reaction-time requirements, reduce decision-making delays, and make internal communication and data processing more efficient at each level.

- Standardisation: Adoption of middleware standards (e.g., ROS, DDS, MOOS) facilitates interoperability across platforms. Standardisation also helps avoid vendor lock-in and reduces overall costs through increased competition.

- Data-centric communication: Modern architectures rely on publish/subscribe paradigms to manage distributed communication efficiently.
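The publish/subscribe principle can be illustrated with a minimal in-process message bus. This is a sketch only: the class `MessageBus` and the topic name are hypothetical, and real middleware such as ROS 2 or DDS adds typed messages, network transport, discovery, and quality-of-service settings on top of this basic idea. Publishers and subscribers share nothing but a topic name, which keeps the modules decoupled.

```python
from collections import defaultdict
from typing import Any, Callable

Callback = Callable[[Any], None]


class MessageBus:
    """Minimal in-process publish/subscribe bus (illustrative only)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callback]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callback) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> int:
        """Deliver message to every subscriber of topic; return the delivery count."""
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(message)
        return len(callbacks)


# A perception module publishes obstacles; a planner and a logger consume
# them without knowing about each other or about the publisher.
bus = MessageBus()
planner_inbox: list[Any] = []
log: list[str] = []
bus.subscribe("/perception/obstacles", planner_inbox.append)
bus.subscribe("/perception/obstacles", lambda msg: log.append(f"obstacles: {msg}"))
delivered = bus.publish("/perception/obstacles", [(12.0, 3.5)])
```

Adding a new consumer, such as a data recorder, requires only another `subscribe` call; the publisher remains unchanged, which is the scalability argument made above.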

Middleware and Frameworks

Middleware serves as the backbone that connects diverse modules, ensuring efficient data exchange and synchronisation. Prominent middleware systems in autonomous vehicles include:

- ROS (Robot Operating System): An open-source framework providing a modular structure for robotic applications, including perception, planning, and control [7].

- DDS (Data Distribution Service): A real-time communication standard widely used in aerospace and defence systems, supporting deterministic data exchange [8].

- MOOS-IvP: A marine-oriented autonomy framework designed for mission planning and vehicle coordination in autonomous underwater and surface vehicles [9].

- AUTOSAR Adaptive Platform: A standard architecture for automotive systems emphasising safety, reliability, and scalability [10].

These middleware platforms not only promote interoperability but also enforce architectural patterns that ensure predictable performance across heterogeneous domains.

Most autonomous systems follow a hierarchical layered architecture:

| Layer | Function | Examples |
|---|---|---|
| Hardware Abstraction | Interface with sensors, actuators, and low-level control | Sensor drivers, motor controllers |
| Perception | Process raw sensor data into meaningful environment representations | Object detection, SLAM |
| Decision-Making / Planning | Generate paths or actions based on goals and constraints | Path planning, behavior trees |
| Control / Execution | Translate plans into commands for actuators | PID, MPC, low-level control loops |
| Communication / Coordination | Handle data sharing between systems or fleets | Vehicle-to-vehicle (V2V), swarm coordination |
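The first four layers of the table can be sketched as small classes that are wired together, with each layer consuming only the output of the layer below it. All class names, the simulated range sensor, and the thresholds are hypothetical, and the communication/coordination layer is omitted for brevity. The point is that any layer, for example the simulated hardware, can be swapped or tested in isolation.

```python
from dataclasses import dataclass


class HardwareAbstraction:
    """Lowest layer: hides concrete sensor and actuator drivers (simulated here)."""

    def __init__(self, simulated_range_m: float) -> None:
        self.simulated_range_m = simulated_range_m
        self.last_throttle = 0.0

    def read_range(self) -> float:
        return self.simulated_range_m

    def apply_throttle(self, throttle: float) -> None:
        self.last_throttle = throttle


@dataclass
class WorldModel:
    obstacle_distance_m: float


class Perception:
    """Turns raw readings into an environment representation."""

    def update(self, raw_range_m: float) -> WorldModel:
        return WorldModel(obstacle_distance_m=max(raw_range_m, 0.0))


class Planner:
    """Chooses a target speed from the world model (hypothetical rule)."""

    def __init__(self, cruise_speed: float = 10.0, stop_distance_m: float = 5.0) -> None:
        self.cruise_speed = cruise_speed
        self.stop_distance_m = stop_distance_m

    def plan(self, world: WorldModel) -> float:
        if world.obstacle_distance_m <= self.stop_distance_m:
            return 0.0
        return self.cruise_speed


class Controller:
    """Converts the target speed into a bounded actuator command (P control)."""

    def __init__(self, kp: float = 0.2) -> None:
        self.kp = kp

    def command(self, target_speed: float, current_speed: float) -> float:
        return max(-1.0, min(1.0, self.kp * (target_speed - current_speed)))


class AutonomyStack:
    """Wires the layers together from hardware up to control and back down."""

    def __init__(self, hw: HardwareAbstraction) -> None:
        self.hw = hw
        self.perception = Perception()
        self.planner = Planner()
        self.controller = Controller()

    def step(self, current_speed: float) -> float:
        world = self.perception.update(self.hw.read_range())
        target = self.planner.plan(world)
        throttle = self.controller.command(target, current_speed)
        self.hw.apply_throttle(throttle)
        return throttle


throttle = AutonomyStack(HardwareAbstraction(simulated_range_m=50.0)).step(current_speed=8.0)
```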

Depending on its functional tasks, a system's architecture is split into multiple layers that separate functionality from technical implementation, as discussed above. The schema of a generic architecture below illustrates the typical tasks at different layers.

The Role of AI and Machine Learning

Modern autonomous systems increasingly integrate machine learning (ML) techniques for perception and decision-making. Deep neural networks enable real-time object detection, semantic segmentation, and trajectory prediction [11]. However, these data-driven methods also introduce architectural challenges:

- Increased computational load requiring edge GPUs or dedicated AI accelerators.

- The need for robust validation and explainability to ensure safety.

- Integration with deterministic control modules in hybrid architectures.

Thus, many systems adopt hybrid designs that combine traditional rule-based or dynamics-based control with data-driven inference modules, balancing interpretability and adaptability.
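One common way to structure such a hybrid design is to pass the output of a learned component through a deterministic, rule-based safety envelope before it reaches the actuators. In the sketch below, `learned_speed_proposal` is a hypothetical stand-in for a trained model, and the limits in `SafetyEnvelope` are illustrative values, not figures taken from any standard.

```python
from dataclasses import dataclass


@dataclass
class SafetyEnvelope:
    max_speed_mps: float = 13.9     # illustrative limit (about 50 km/h)
    min_time_gap_s: float = 2.0     # minimum time gap to the object ahead
    max_increase_mps: float = 1.0   # largest speed increase allowed per cycle


def learned_speed_proposal(features: dict[str, float]) -> float:
    """Hypothetical stand-in for a trained model; here it simply echoes an input."""
    return features["proposed_speed_mps"]


def safety_filter(proposed: float, current: float, gap_m: float,
                  env: SafetyEnvelope) -> float:
    """Deterministic rules that bound the data-driven proposal."""
    speed = min(max(proposed, 0.0), env.max_speed_mps)  # rule 1: allowed range
    speed = min(speed, gap_m / env.min_time_gap_s)      # rule 2: keep the time gap
    # Rule 3: limit acceleration only; braking requests pass through unchanged.
    return min(speed, current + env.max_increase_mps)


command = safety_filter(
    learned_speed_proposal({"proposed_speed_mps": 20.0}),
    current=10.0, gap_m=100.0, env=SafetyEnvelope(),
)
```

Because the rules are simple and deterministic, they can be verified independently of the learned model, which addresses the validation and explainability concerns listed above.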

Typical Autonomous System Software Architecture

Autonomous systems, regardless of their physical domain — ground, aerial, or marine — share a common architectural logic that structures how data flows from sensors to decision-making and control units. This section explores the typical functional architecture and the operational pipeline that enable autonomous behaviour.

The Sense–Plan–Act Paradigm

The foundation of most autonomous systems lies in the Sense–Plan–Act (SPA) paradigm, introduced in early robotics and still relevant today. It extends the architecture of a general controlled system by introducing explicit sensing, decision-making, and acting steps. This model organises operations into three distinct but interdependent stages:

- Sense: Gather and interpret data from sensors to build an internal representation of the environment.

- Plan: Use that representation to decide on goals, trajectories, or actions.

- Act: Execute those actions through control and actuation mechanisms.

This conceptual model remains universal across domains and can be represented as follows.

The SPA cycle forms the basis for layered architectures in autonomous vehicles, where each layer refines or extends one part of this process. In practice, modern architectures also include learning and communication layers that enhance adaptability and collaboration between agents [13].

A simplified data-flow architecture extends the SPA model into a more practical form and illustrates how perception data passes through the layers to produce control actions:

This closed-loop interaction ensures that systems continuously update their understanding of the world, adjusting behaviour based on environmental changes or feedback from control execution [14].
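The closed Sense–Plan–Act loop can be sketched as a toy simulation in which a vehicle approaches a static obstacle. The constants (obstacle position, control period, acceleration limit, safety margin) and the ideal distance sensor are assumptions made for illustration. The "act" step also updates the vehicle state, standing in for the real vehicle dynamics, so each new sense step observes the consequence of the previous action.

```python
from dataclasses import dataclass


@dataclass
class VehicleState:
    position_m: float = 0.0
    speed_mps: float = 0.0


OBSTACLE_POSITION_M = 40.0  # hypothetical static obstacle ahead
DT_S = 0.1                  # control period (assumed)
MAX_ACCEL = 3.0             # actuator limit in m/s^2 (assumed)


def sense(state: VehicleState) -> float:
    """Sense: measure the gap to the obstacle (ideal sensor assumed)."""
    return OBSTACLE_POSITION_M - state.position_m


def plan(gap_m: float, safety_margin_m: float = 5.0) -> float:
    """Plan: highest speed from which the vehicle can still stop before the margin."""
    usable_m = max(gap_m - safety_margin_m, 0.0)
    return min(15.0, (2.0 * MAX_ACCEL * usable_m) ** 0.5)


def act(state: VehicleState, target_speed: float) -> None:
    """Act: change speed towards the target within limits (toy dynamics included)."""
    accel = (target_speed - state.speed_mps) / DT_S
    accel = max(-MAX_ACCEL, min(MAX_ACCEL, accel))
    state.speed_mps = max(state.speed_mps + accel * DT_S, 0.0)
    state.position_m += state.speed_mps * DT_S


def run(steps: int = 400) -> VehicleState:
    state = VehicleState()
    for _ in range(steps):
        gap = sense(state)     # Sense
        target = plan(gap)     # Plan
        act(state, target)     # Act; the updated state closes the loop
    return state


final_state = run()
```

The vehicle accelerates, then brakes as the measured gap shrinks, and comes to rest close to the 5 m safety margin in front of the obstacle.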

Distributed vs. Centralised Architectures

Architectural organisation also depends on whether processing is centralised or distributed:

- Centralised Architectures:
  - All decision-making occurs on a single computing unit.
  - Easier synchronisation but can become a bottleneck.
  - Common in small-scale robots and drones.

- Distributed Architectures:
  - Tasks are distributed among multiple computing nodes or agents.
  - Enhanced scalability and fault tolerance.
  - Essential for multi-vehicle coordination (swarm robotics, fleet operations).

Middleware frameworks like ROS 2 and DDS are inherently designed to support distributed computation, enabling decentralised data exchange in real time [15].

Safety and Redundancy

Safety is a critical design consideration. Redundant architectures replicate essential components (e.g., dual sensors, parallel computing paths) to ensure operation even during failures. For example, aircraft autopilot systems employ triple-redundant processors and cross-monitoring logic [16]. Similarly, marine vehicles use redundant navigation sensors to counter GPS outages caused by water interference. Architectural safety mechanisms include:

- Failover controllers

- Health monitoring nodes

- Watchdog timers

- Self-diagnostics and logging subsystems

These ensure resilience, especially in mission-critical or human-in-the-loop systems.
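Two of these mechanisms can be sketched in a few lines: a watchdog that declares a module failed when its heartbeat stops, triggering failover to a backup controller, and a median voter over three redundant sensor readings in the spirit of triple redundancy with cross-monitoring. The timeout, tolerance, and all names are hypothetical. Time is passed in explicitly so the logic is deterministic; certified systems implement such mechanisms in dedicated hardware and/or qualified software.

```python
import statistics
from typing import Optional


class Watchdog:
    """Flags a monitored module as failed if its heartbeats stop arriving."""

    def __init__(self, timeout_s: float) -> None:
        self.timeout_s = timeout_s
        self.last_heartbeat_s: Optional[float] = None

    def heartbeat(self, now_s: float) -> None:
        self.last_heartbeat_s = now_s

    def expired(self, now_s: float) -> bool:
        if self.last_heartbeat_s is None:
            return True  # never heard from the module
        return now_s - self.last_heartbeat_s > self.timeout_s


def select_controller(primary_wd: Watchdog, now_s: float) -> str:
    """Failover: hand control to the backup when the primary's watchdog expires."""
    return "backup" if primary_wd.expired(now_s) else "primary"


def vote(readings: list[float], tolerance: float) -> tuple[float, list[int]]:
    """Median vote over redundant readings; also return indices of suspect sensors."""
    median = statistics.median(readings)
    suspects = [i for i, r in enumerate(readings) if abs(r - median) > tolerance]
    return median, suspects


wd = Watchdog(timeout_s=0.5)
wd.heartbeat(now_s=1.0)
early = select_controller(wd, now_s=1.2)  # heartbeat still fresh -> "primary"
late = select_controller(wd, now_s=2.0)   # heartbeat missed -> "backup"
altitude_m, suspect_sensors = vote([100.2, 100.1, 87.0], tolerance=1.0)  # -> 100.1, [2]
```

The median tolerates one faulty reading out of three, which is why triple redundancy can mask a single failure while cross-monitoring identifies the faulty channel.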

Reference Architectures for Autonomous Systems

Reference architectures serve as standardised templates guiding the design of specific systems. They establish a common vocabulary, promote interoperability, and enable systematic validation.

ROS and ROS 2 Framework

Robot Operating System (ROS) provides a modular, publish–subscribe communication infrastructure widely adopted in research and industry. Its successor, ROS 2, adds real-time capabilities, security features, and DDS-based communication, making it suitable for production-grade autonomous systems [17].

The ROS 2 architecture provides several advantages, including vendor independence at the component level, modularity that eases development, and a large community with extensive package libraries for deployment. ROS 2 is now the backbone of major open-source projects such as Autoware (autonomous driving), and it integrates with PX4-Autopilot (UAV control) through a DDS-based bridge.

AUTOSAR Adaptive Platform

In the automotive sector, the AUTOSAR (AUTomotive Open System ARchitecture) standard defines a scalable, service-oriented architecture supporting high-performance applications such as automated driving [18].

The AUTOSAR Adaptive Platform is conceptually a middleware: it provides Adaptive Applications with services beyond those available from the underlying operating system, drivers, and extensions. Its distinctive features include support for real-time and safety-critical requirements (ISO 26262) and the use of SOME/IP and DDS for service-based communication. AUTOSAR is widely implemented in production vehicles from manufacturers such as BMW, Volkswagen, and Toyota [19].
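The service-oriented style can be contrasted with plain publish/subscribe using a toy registry in which providers offer named services and clients find a service before calling one of its methods. This is a conceptual sketch only: it does not reproduce the AUTOSAR `ara::com` API or the SOME/IP wire format, and every name in it (`ServiceRegistry`, `BrakeService`, `RequestDeceleration`) is hypothetical.

```python
from typing import Any, Callable, Optional

Methods = dict[str, Callable[..., Any]]


class ServiceRegistry:
    """Toy service discovery: providers offer services, clients find them."""

    def __init__(self) -> None:
        self._services: dict[str, Methods] = {}

    def offer(self, name: str, methods: Methods) -> None:
        self._services[name] = methods

    def stop_offer(self, name: str) -> None:
        self._services.pop(name, None)

    def find(self, name: str) -> Optional[Methods]:
        return self._services.get(name)


def request_deceleration(mps2: float) -> bool:
    """Provider side: accept only physically plausible requests (illustrative bound)."""
    return 0.0 <= mps2 <= 9.0


registry = ServiceRegistry()
registry.offer("BrakeService", {"RequestDeceleration": request_deceleration})

# Client side: discover the service first, then call a method on it.
brake = registry.find("BrakeService")
accepted = brake["RequestDeceleration"](3.5) if brake else False

registry.stop_offer("BrakeService")
available_after_stop = registry.find("BrakeService") is not None
```

Unlike a topic, a service has an explicit provider that can appear and disappear at runtime, so clients must handle the case in which the service is not currently offered.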

JAUS – Joint Architecture for Unmanned Systems

The JAUS (Joint Architecture for Unmanned Systems) is a U.S. Department of Defense standard (SAE AS5669A) defining a message-based, modular architecture for interoperability among unmanned systems [20]. It is domain-agnostic, supporting aerial, ground, and marine vehicles. JAUS defines:

- A component-based hierarchy (Subsystem → Node → Component → Service)

- Standardised message sets for communication

- Cross-domain interoperability

JAUS remains influential in defence and research projects where multiple unmanned vehicles must coordinate under a unified framework. Owing to its straightforward, easy-to-implement architecture, it has been adopted across different systems and domains.
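The component-based hierarchy can be illustrated by packing a subsystem/node/component triple into a single integer address and routing a message by comparing the address fields level by level. The 16/8/8-bit split used below is an assumption made for this sketch; consult the SAE JAUS documents for the normative identifier layout. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JausAddress:
    """Hierarchical address: subsystem -> node -> component (field widths assumed)."""
    subsystem: int  # assumed 16 bits
    node: int       # assumed 8 bits
    component: int  # assumed 8 bits

    def pack(self) -> int:
        if not (0 <= self.subsystem < 2**16 and 0 <= self.node < 2**8
                and 0 <= self.component < 2**8):
            raise ValueError("address field out of range")
        return (self.subsystem << 16) | (self.node << 8) | self.component

    @staticmethod
    def unpack(value: int) -> "JausAddress":
        return JausAddress((value >> 16) & 0xFFFF, (value >> 8) & 0xFF, value & 0xFF)


def route(dest: JausAddress, here: JausAddress) -> str:
    """Decide the next hop by walking down the hierarchy."""
    if dest.subsystem != here.subsystem:
        return "forward to another subsystem"
    if dest.node != here.node:
        return "forward to another node"
    return "deliver on this node"


local = JausAddress(subsystem=2, node=1, component=5)
packed = local.pack()
```

The hierarchical address lets each level make a routing decision without knowing the internals of the levels below it, which is what makes the architecture easy to extend with new vehicles or components.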

MOOS-IvP Architecture for Marine Systems

MOOS (Mission Oriented Operating Suite) combined with IvP (Interval Programming) forms a robust architecture for marine autonomy, developed at MIT and used in NATO and U.S. Navy programs [21].

The IvP (Interval Programming) Helm provides decision-making based on models supplied by the developers, while the MOOSDB provides access to the data and decisions published by applications. Client applications (or simply Applications) implement the main functionality; modularity makes it possible to integrate different functions, isolate them logically, and give them access through a common communication space. Communication between MOOS communities is enabled by the pMOOSBridge middleware. Together, these features enable an asynchronous, behaviour-based response to a changing environment, greater flexibility, easier maintainability and, to some extent, future-proof solutions.
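The behaviour-based arbitration of the IvP Helm can be approximated as follows: each behaviour scores every candidate decision (here, a heading), the scores are combined using behaviour priority weights, and the helm selects the decision with the highest total. This is a simplified sketch: the actual IvP Helm represents each objective as a piecewise-linear function over intervals of the decision space and uses a dedicated solver, whereas here the heading is simply discretised in 1-degree steps. The behaviour functions and weights are hypothetical.

```python
def angle_diff(a: float, b: float) -> float:
    """Smallest absolute difference between two headings, in degrees."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def waypoint_behaviour(heading: float, bearing_to_waypoint: float) -> float:
    """Utility 100 when pointing at the waypoint, falling off linearly."""
    return max(0.0, 100.0 - angle_diff(heading, bearing_to_waypoint))


def avoid_behaviour(heading: float, bearing_to_obstacle: float,
                    half_width_deg: float = 30.0) -> float:
    """Utility 0 inside a cone around the obstacle bearing, 100 outside it."""
    return 0.0 if angle_diff(heading, bearing_to_obstacle) < half_width_deg else 100.0


def helm_decide(bearing_to_waypoint: float, bearing_to_obstacle: float,
                w_waypoint: float = 1.0, w_avoid: float = 2.0) -> int:
    """Return the heading that maximises the weighted sum of behaviour utilities."""
    best_heading, best_score = 0, float("-inf")
    for heading in range(360):
        score = (w_waypoint * waypoint_behaviour(heading, bearing_to_waypoint)
                 + w_avoid * avoid_behaviour(heading, bearing_to_obstacle))
        if score > best_score:
            best_heading, best_score = heading, score
    return best_heading


clear_path = helm_decide(bearing_to_waypoint=90.0, bearing_to_obstacle=270.0)
blocked_path = helm_decide(bearing_to_waypoint=90.0, bearing_to_obstacle=90.0)
```

With the obstacle behind the vehicle, the helm steers straight at the waypoint; with the obstacle dead ahead, it picks the closest heading just outside the avoidance cone. Neither behaviour needs to know about the other, which is the key property of behaviour-based arbitration.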

Comparative Summary of Reference Architectures

| Architecture | Domain | Key Features | Communication Model |
|---|---|---|---|
| ROS / ROS 2 | General / Research | Modular, open-source, community-driven | Publish–Subscribe (DDS) |
| AUTOSAR Adaptive | Automotive | Safety, real-time, standardised | Service-oriented (SOME/IP, DDS) |
| JAUS | Defence / Multi-domain | Interoperability, hierarchy-based | Message-based |
| MOOS-IvP | Marine | Behaviour-based, decentralised | Shared database model |

Recent trends combine multiple reference architectures to exploit their strengths. For example:

- ROS–AUTOSAR bridges enable integration between experimental and production-grade systems.

- DDS–MQTT hybrids connect real-time robotics with cloud-based IoT analytics.

- ROS–MOOS integrations allow cross-domain cooperation between underwater and surface robots [22].

Such hybridization reflects the growing need for flexibility and cross-domain interoperability in modern autonomous systems.

Application Domains – Aerial, Ground, and Marine Vehicle Architectures

Application Domains Overview

Autonomous systems operate across diverse environments that impose unique constraints on perception, communication, control, and safety. While all share a foundation in modular, layered architectures, the operational domain strongly influences how these layers are implemented [23,24]. Some of the most important challenges and differences are listed in the following table:

| Domain | Environmental Constraints | Architectural Challenges |
|---|---|---|
| Aerial | 3D motion, strict safety & stability, limited power | Real-time control, airspace coordination, fail-safes |
| Ground | Structured/unstructured terrain, interaction with humans; complex localisation and mapping | Sensor fusion, dynamic path planning, V2X communication |
| Marine | Underwater acoustics, communication latency, and localisation drift | Navigation under low visibility, adaptive control, and energy management |

Aerial Vehicle Architectures

Aerial autonomous systems include Unmanned Aerial Vehicles (UAVs), drones, and autonomous aircraft. Their software architectures must ensure flight stability, real-time control, and safety compliance while supporting mission-level autonomy [25]. UAV architectures are often tightly coupled with flight control hardware, leading to a split architecture:

- Onboard system (real-time control and perception)

- Ground control system (mission management, supervision)

Some of the most popular architectures:

PX4 Autopilot: An open-source flight control stack supporting multirotor, fixed-wing, and VTOL aircraft. The PX4 architecture is divided into a Flight Stack (estimation, control) and a Middleware Layer (uORB) for data communication [26]. Its implementation supports MAVLink communication and ROS 2 integration, which has made it a popular and widely used solution.

ArduPilot: By comparison, ArduPilot has a modular architecture with layers for the HAL (Hardware Abstraction Layer), vehicle-specific code, and mission control. It is widely used by the community and in research and commercial UAVs for mapping, surveillance, and logistics [27].

Still, some challenges remain:

- Safety and Redundancy: Flight-critical functions must survive component failures.

- Communication Constraints: Limited bandwidth and intermittent connectivity.

- Energy Efficiency: Trade-offs between payload weight and computational power.

- Airspace Regulation: Compliance with UAV Traffic Management (UTM) systems [28].

Ground Vehicle Architectures

Ground autonomous systems encompass self-driving cars, unmanned ground vehicles (UGVs), and delivery robots. Their architectures must manage complex interactions with dynamic environments, multi-sensor fusion, and strict safety requirements [29]. A ground vehicle’s software stack integrates high-level decision-making with low-level vehicle dynamics, ensuring compliance with ISO 26262 functional safety standards [30]. One of the reference architectures used is Autoware.AI (and its successor Autoware.Auto), which is an open-source reference architecture for autonomous driving built on ROS/ROS 2. It implements all functional modules required for L4 autonomy, including:

- Perception (object recognition, segmentation)

- Planning (route, behaviour, trajectory)

- Control (PID, MPC)

- Simulation and visualisation tools
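The PID option listed under Control can be sketched as a discrete-time speed controller acting on a toy longitudinal vehicle model. The gains, time step, throttle limits, and plant model (speed responds to throttle, with a linear drag term) are illustrative assumptions and are not taken from Autoware's controllers or parameters.

```python
class PID:
    """Discrete PID controller with output clamping and conditional-integration anti-windup."""

    def __init__(self, kp: float, ki: float, kd: float, dt: float,
                 out_min: float = -1.0, out_max: float = 1.0) -> None:
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None  # no derivative on the first call

    def update(self, setpoint: float, measurement: float) -> float:
        error = setpoint - measurement
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        candidate_integral = self.integral + error * self.dt
        output = self.kp * error + self.ki * candidate_integral + self.kd * derivative
        if self.out_min < output < self.out_max:
            self.integral = candidate_integral  # integrate only while not saturated
        return max(self.out_min, min(self.out_max, output))


def simulate(target_speed: float = 10.0, steps: int = 600, dt: float = 0.05) -> float:
    """Toy plant dv/dt = 4*throttle - 0.1*v (assumed); returns the final speed."""
    pid = PID(kp=0.5, ki=0.2, kd=0.0, dt=dt)
    v = 0.0
    for _ in range(steps):
        throttle = pid.update(target_speed, v)
        v += (4.0 * throttle - 0.1 * v) * dt
    return v


final_speed = simulate()
```

The integral term removes the steady-state error caused by drag, while the anti-windup rule prevents the integral from growing during the initial, saturated acceleration phase. An MPC controller would instead optimise a sequence of future commands against a vehicle model and constraints.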

Autoware emphasises modularity, allowing integration with hardware-in-the-loop (HIL) simulators and real vehicle platforms [31]. Currently, the automotive industry uses several standards to foster the development and practical implementation of future autonomous ground transport systems:

- AUTOSAR Adaptive Platform: Provides safety-certified, service-oriented design.

- ISO 26262: Functional safety standard ensuring risk assessment and hazard analysis.

- SAE J3016: Defines levels of driving automation (0–5).

- ASAM OpenDRIVE / OpenSCENARIO: Data models for road networks and driving scenarios used in simulation and testing.
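The SAE J3016 levels can be encoded as a simple enumeration, for example to tag test scenarios or simulation runs with the automation level under test. The level names follow SAE J3016; the two helper functions are hypothetical and deliberately simplified (J3016 also defines the operational design domain and other concepts not modelled here).

```python
from enum import IntEnum


class DrivingAutomationLevel(IntEnum):
    """SAE J3016 levels of driving automation."""
    NO_DRIVING_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_DRIVING_AUTOMATION = 2
    CONDITIONAL_DRIVING_AUTOMATION = 3
    HIGH_DRIVING_AUTOMATION = 4
    FULL_DRIVING_AUTOMATION = 5


def driver_must_supervise(level: DrivingAutomationLevel) -> bool:
    """Levels 0-2: the human driver performs or supervises the driving task."""
    return level <= DrivingAutomationLevel.PARTIAL_DRIVING_AUTOMATION


def system_is_fallback(level: DrivingAutomationLevel) -> bool:
    """Levels 4-5: the automated system handles the fallback itself (simplified)."""
    return level >= DrivingAutomationLevel.HIGH_DRIVING_AUTOMATION


scenario_level = DrivingAutomationLevel(3)
```

Level 3 is the boundary case: the driver need not supervise continuously, but must take over when the system requests it.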

Due to the environmental complexity, the following main challenges remain in the autonomous ground vehicle domain:

- Sensor Fusion Complexity: Handling heterogeneous sensor data in urban environments.

- Uncertainty and Prediction: Managing unpredictable behaviours of pedestrians and other vehicles.

- Computation Load: Real-time inference on limited onboard computing resources.

- V2X Communication: Integration with smart infrastructure and other vehicles.
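Sensor fusion in its simplest form can be illustrated with a one-dimensional Kalman filter that combines a motion prediction with position measurements from two sensors of different precision, for example a coarse GNSS fix and a precise LiDAR-based localisation. The noise variances, the measurement values, and the constant-velocity motion model are illustrative assumptions; production stacks fuse many more states and sensors.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    x: float    # position estimate (m)
    var: float  # variance of the estimate (m^2)


def predict(est: Estimate, velocity: float, dt: float, process_var: float) -> Estimate:
    """Propagate the estimate with a constant-velocity model; uncertainty grows."""
    return Estimate(est.x + velocity * dt, est.var + process_var)


def update(est: Estimate, z: float, meas_var: float) -> Estimate:
    """Fuse one measurement z; the Kalman gain weights it by relative confidence."""
    k = est.var / (est.var + meas_var)
    return Estimate(est.x + k * (z - est.x), (1.0 - k) * est.var)


# One fusion cycle: predict, then fold in both sensors one after the other.
est = Estimate(x=0.0, var=1.0)
est = predict(est, velocity=10.0, dt=0.1, process_var=0.1)
est = update(est, z=1.3, meas_var=4.0)    # coarse sensor (assumed GNSS-like)
est = update(est, z=0.95, meas_var=0.04)  # precise sensor (assumed LiDAR-like)
```

The precise sensor dominates the result, and the fused variance ends up smaller than that of either sensor alone, which is the main benefit of fusion.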

Marine Vehicle Architectures

Marine autonomous vehicles operate in harsh, unpredictable environments characterised by communication latency, limited GPS access, and energy constraints. They include AUVs (Autonomous Underwater Vehicles), ASVs (Autonomous Surface Vehicles), and ROVs (Remotely Operated Vehicles). These vehicles rely heavily on acoustic communication and inertial navigation, requiring architectures that can operate autonomously for long durations without human intervention [32].
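Because GPS is unavailable underwater, position is typically propagated by dead reckoning from heading and velocity measurements (for example, a compass or IMU together with a Doppler velocity log, DVL) and corrected whenever an external fix arrives. The sketch below integrates body-frame velocities in a local north/east frame; the speed, duration, and the 1-degree compass bias used to show drift are illustrative assumptions.

```python
import math


def dead_reckon(north: float, east: float, heading_deg: float,
                surge: float, sway: float, dt: float) -> tuple[float, float]:
    """Advance a north/east position using body-frame velocities.

    heading_deg is measured clockwise from north; surge is forward speed and
    sway is speed to starboard (m/s), e.g. as reported by a DVL.
    """
    h = math.radians(heading_deg)
    v_north = surge * math.cos(h) - sway * math.sin(h)
    v_east = surge * math.sin(h) + sway * math.cos(h)
    return north + v_north * dt, east + v_east * dt


# Drift illustration: 10 minutes at 1.5 m/s heading east, with and without
# an assumed 1-degree compass bias and no external position fixes.
true_pos, biased_pos = (0.0, 0.0), (0.0, 0.0)
for _ in range(600):
    true_pos = dead_reckon(*true_pos, 90.0, 1.5, 0.0, 1.0)
    biased_pos = dead_reckon(*biased_pos, 91.0, 1.5, 0.0, 1.0)
drift_m = math.dist(true_pos, biased_pos)
```

Even this small bias produces roughly 16 m of error after 900 m of travel, and the error keeps growing with distance, which is why marine architectures must schedule periodic acoustic or surface position fixes.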

The reference architecture is MOOS-IvP, discussed previously. MOOS provides interprocess communication and logging, while the IvP Helm serves as the decision-making engine, performing behaviour-based optimisation over IvP functions. The architecture supports distributed coordination (multi-vehicle missions) and robust low-bandwidth communication [32]. It is used extensively in NATO CMRE and MIT marine robotics research [33].

Comparative Analysis Across Domains

While the overall trend is to take advantage of modularity, abstraction and reuse, there are significant differences among the application domains.

| Aspect | Aerial | Ground | Marine |
|---|---|---|---|
| Primary Frameworks | PX4, ArduPilot, ROS 2 | Autoware, ROS 2, AUTOSAR | MOOS-IvP |
| Communication | MAVLink, RF, 4G/5G | Ethernet, V2X, CAN | Acoustic, Wi-Fi |
| Localization | GPS, IMU, Vision | GPS, LiDAR, HD Maps | DVL, IMU, Acoustic |
| Main Challenge | Real-time stability | Sensor fusion & safety | Navigation & communication delay |
| Safety Standard | DO-178C | ISO 26262 | IMCA Guidelines |
| Emerging Trend | Swarm autonomy | Edge AI | Cooperative fleets |

An important trend in recent years is the convergence of architectures across domains. Unified software platforms (e.g., ROS 2 and the DDS middleware) now allow interoperability between aerial, ground, and marine systems, enabling multi-domain missions such as coordinated search-and-rescue (SAR) operations. The integration of AI, edge computing, and cloud-based digital twins has blurred domain boundaries, giving rise to heterogeneous fleets of autonomous agents working collaboratively.

Each domain nevertheless keeps its own priorities. Aerial systems focus on stability, lightweight real-time control, and airspace compliance; open stacks like PX4/ArduPilot show how flight-critical loops coexist with higher-level autonomy. Ground systems must handle dense, dynamic scenes, heavy sensor fusion, and functional safety; stacks like Autoware illustrate a full L4 pipeline from localisation to MPC-based control. Marine systems must cope with low-bandwidth communications, GPS-denied navigation, and long-endurance missions; MOOS-IvP’s shared-database and behaviour-arbitration approach fits these realities. In summary, successful autonomy rests on sound software architecture rather than on any single algorithm. The existing frameworks provide practical blueprints that can be adapted, mixed, and extended to meet mission demands across air, land, and sea.

Domain-Specific Challenges in Autonomy

[rczyba][✓ rczyba, 2025-10-20]

Autonomous technologies and robotics are redefining what is possible, improving efficiency and safety across sectors. Advanced applications such as self-driving vehicles and agricultural (crop and harvesting) robots rely on precise GNSS/GPS positioning and require centimeter-level accuracy to function properly. As the range of autonomous applications expands, access to reliable, real-time GNSS/GPS correction services becomes not only useful but essential. Mapping and localization algorithms, together with sensor models, allow a vehicle to determine its position and orientation even in unfamiliar environments. Route planning and optimization algorithms, combined with obstacle avoidance algorithms, enable vehicles to reach their destinations independently. The development of autonomous driving technology also involves systems that enable communication between self-driving cars, as well as between AVs and their surroundings. Autonomous driving technology is constantly evolving, and one of the greatest challenges in developing fully functional self-driving cars is that the performance of individual sensors depends on weather conditions.
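The principle behind differential correction services can be sketched in a few lines. A reference (base) station at precisely surveyed coordinates compares its own GNSS fix with its known position and broadcasts the difference. A nearby rover then subtracts that error from its own fix. This is a simplified position-domain sketch. It assumes that base and rover are close enough to share the same errors and to use the same satellites. Real DGNSS and RTK services correct pseudorange or carrier-phase measurements rather than final positions. The coordinates below are hypothetical local east/north/up values in metres.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Enu:
    """Position in a local east-north-up frame, in metres."""
    e: float
    n: float
    u: float

    def __sub__(self, other: "Enu") -> "Enu":
        return Enu(self.e - other.e, self.n - other.n, self.u - other.u)


def base_correction(surveyed: Enu, measured: Enu) -> Enu:
    """Error observed at the base station (measured minus surveyed position)."""
    return measured - surveyed


def correct_rover(rover_measured: Enu, correction: Enu) -> Enu:
    """Remove the error shared with the base station from the rover's raw fix."""
    return rover_measured - correction


# Hypothetical values: the base station sees a (1.2, -0.8, 2.0) m error.
base_true = Enu(0.0, 0.0, 0.0)
base_fix = Enu(1.2, -0.8, 2.0)
rover_fix = Enu(101.2, 49.2, 2.5)

corr = base_correction(base_true, base_fix)
print(correct_rover(rover_fix, corr))
```

With these assumed numbers the corrected rover position is roughly (100, 50, 0.5) m. In practice the correction degrades as the distance between base and rover grows, because the shared-error assumption no longer holds.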

A significant challenge is the autonomous navigation of unmanned vehicles in environments with limited or no access to localization data. Autonomous navigation without GNSS is a complex and rapidly evolving technology area with the potential to revolutionize many industries and applications. Key technologies and methods for navigation without GNSS information include inertial navigation systems (INS), vision-based localization, lidar-based localization, and indoor localization systems. SLAM (Simultaneous Localization and Mapping) also provides promising results: it simultaneously determines the vehicle's position and builds a map of the environment in which the vehicle moves. Each of these technologies has its advantages and disadvantages, but none of them alone provides a complete picture of the surrounding world. Although GNSS-free navigation has many advantages, it also faces challenges. These include difficulties with accurate measurement in unknown or changing environments and problems with sensor calibration, both of which can lead to navigation errors.
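A one-dimensional dead-reckoning sketch shows why calibration errors matter once GNSS is lost. Integrating an accelerometer twice turns even a small constant bias b into a position error of roughly ½·b·t², and that error grows quadratically with time. The bias, sample rate and outage duration below are hypothetical. A real INS integrates full 3D attitude, velocity and position.

```python
def dead_reckon(accels, dt):
    """Integrate acceleration samples twice (explicit Euler) to obtain position in metres."""
    velocity = 0.0
    position = 0.0
    for a in accels:
        velocity += a * dt
        position += velocity * dt
    return position


# Hypothetical scenario: the vehicle is actually stationary for 60 s,
# but an uncalibrated accelerometer reports a constant bias of 0.01 m/s^2.
dt = 0.01                       # 100 Hz sampling (assumed)
duration = 60.0                 # seconds without GNSS (assumed)
bias = 0.01                     # accelerometer bias in m/s^2 (assumed)
samples = round(duration / dt)

drift = dead_reckon([bias] * samples, dt)
print(f"integrated position error after {duration:.0f} s: {drift:.1f} m")
print(f"analytic estimate 0.5*b*t^2: {0.5 * bias * duration ** 2:.1f} m")
```

With these assumed numbers, both lines report about 18 m of drift for a vehicle that never moved. Doubling the outage roughly quadruples the error, which is why inertial navigation is usually aided by other sources such as vision, lidar or SLAM.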

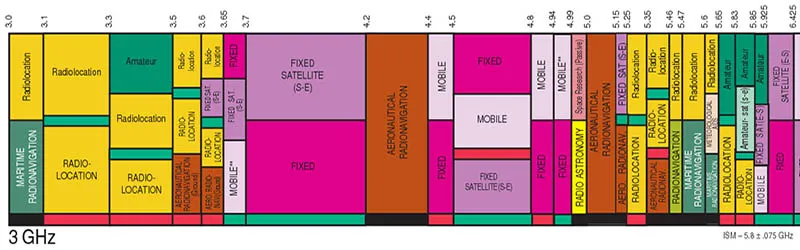

In recent decades, much research and technology development has addressed various autonomous systems, including airborne, ground-based, and naval systems (see Figure 11). Many of these technologies have already reached maturity and can be implemented on unmanned platforms, while others are still in the research and development phase.

Domain-specific challenges in the autonomy of vehicles include several technical, safety, regulatory, and ethical issues unique to different operational environments. Key challenges are:

- Sensor Limitations and Perception
  - Accurate object detection in diverse weather conditions (rain, fog, snow).
  - Distinguishing between road users like pedestrians, cyclists, and animals.
  - Handling sensor occlusions and blind spots.

- Complex and Dynamic Environments
  - Navigating complex urban settings with unpredictable human behavior.
  - Managing construction zones, roadworks, and unexpected obstacles.
  - Adapting to high traffic density and erratic driver behaviors.

- Localization and Mapping
  - Achieving precise real-time localization in GPS-denied environments (tunnels, urban canyons).
  - Maintaining up-to-date high-definition maps amid road changes.

- Decision-Making and Planning
  - Ensuring safe, compliant, and contextually appropriate decisions.
  - Handling edge cases like jaywalking pedestrians or unusual vehicle maneuvers.

- Communication and Cybersecurity
  - Secure vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) communications.
  - Protecting against hacking or malicious cyber-attacks.

- Regulatory and Legal Challenges
  - Navigating diverse legal frameworks across regions.
  - Defining liability in accidents involving autonomous vehicles.
  - Achieving standardization and interoperability.

- Ethical Considerations
  - Decision-making in unavoidable accident scenarios.
  - Privacy concerns related to data collection and sharing.

- Infrastructure and Standardization
  - Lack of uniform infrastructure to support vehicle-to-infrastructure communication.
  - Variability in road signage, markings, and infrastructure standards.

- Testing, Validation, and Certification
  - Developing comprehensive testing protocols for safety assurance.
  - Validating autonomous systems in all possible scenarios.

- Human Factors and User Acceptance
  - Ensuring passenger trust and understanding of autonomous system limits.
  - Managing transition of control between human and automation.

Solving these domain-specific challenges is crucial for the safe and reliable deployment of autonomous vehicles in the various operational scenarios of everyday life. The following subchapters discuss specific challenges, taking into account the vehicle type and its operating environment.

Social acceptance

Building public trust in autonomous vehicles will be a major challenge. In 2025, an online preference survey collected 235 responses from across Europe [35]. Respondents were asked whether they would feel comfortable traveling in an autonomous car, and more than half said that riding in a driverless car would make them feel uncomfortable. Distrust of machines is fueled primarily by the prospect of losing control over one's fate, which is one of the fundamental philosophical issues in the development of artificial intelligence. It therefore seems crucial for sociologists and psychologists to examine this fear thoroughly and to develop a plan for educating the public. At the same time, excessive reliance on automated systems slows drivers' reactions in crisis situations and reduces their willingness to take manual control. Increased trust also leads to longer driver reaction times to roadside warnings.

Another expected advantage of introducing autonomous vehicles in public transport would be a reduction in fatal accidents. According to statistics, driver error contributes to 75–90% of all road accidents, so eliminating it could significantly reduce fatalities among drivers and passengers. Critics of this view point out, however, that automation can correct only some human errors, not eliminate them entirely. Nevertheless, forecasts regarding wider access to this technology are optimistic. According to the EU Transport Commissioner, by 2030 roads in member states will be shared by cars with conditional automation and standard vehicles, while full automation is another 10–15 years away. Research performed with the autonomous Blees bus [36], built in Gliwice, Poland, confirms the survey results and gives grounds for optimism about the future.

Software-defined vehicle

Software-defined vehicle (SDV) is a term used to describe, among other things, cars whose parameters and functions are controlled by software. It reflects the car's ongoing evolution from a purely mechanical product to a software-based electronic device (see Figure 13).

Today's premium vehicles can require up to 150 million lines of code distributed across over a hundred electronic control units, and utilize an ever-increasing number of sensors, cameras, radars, and lidar sensors. Lower-end cars have only slightly fewer. Three powerful trends—electrification, automation, and digitalization—are fundamentally changing customer expectations and forcing manufacturers to invest in software to meet them.

As driver assistance systems become automated and vehicles acquire autonomous driving capabilities, the demand for software also grows. The more sophisticated content consumers expect from their infotainment systems, the more digital content the vehicle must manage. And because such a vehicle, as part of the Internet of Things (IoT), transmits increasing amounts of data to and from the cloud, software is required to process and manage it.

Agility in software development

There are two basic software development methods: the traditional waterfall model and a newer approach called agile, which is key to the automotive industry's transformation toward “software-defined vehicles.”

In the waterfall approach, software development proceeds through distinct, sequential phases. These phases include requirements definition, implementation, integration, and testing. The waterfall method has numerous drawbacks: it is not flexible enough to keep up with the pace of change in today's automotive industry; it does not emphasize close collaboration and rapid feedback from internal business teams and external partners; and it does not ensure testing occurs early enough in the project. The ability to test complex software systems is particularly important in the automotive industry, where engineers conduct advanced simulations of real-world driving conditions—software-in-the-loop, hardware-in-the-loop, and vehicle-in-the-loop—before a vehicle hits the road.

The agile approach represents a cultural and procedural shift from the linear and sequential waterfall approach. Agile is iterative, collaborative, and incorporates frequent feedback loops. Organizations using agile form small teams to address specific, high-priority business requirements. Teams often work in a fixed, relatively short iterative cycle, called a Sprint. Within this single iteration, they produce a complete and tested increment of a software product based on specific business needs, ready for stakeholder review.

Both waterfall and agile approaches can utilize the V-model for software development, sequentially progressing through the design, implementation, and testing phases. The difference is that the waterfall approach uses the V-model as a single-pass process throughout the project, while the agile approach may implement the V-model within each Sprint.

Cybersecurity Challenges

Software-defined vehicles (SDVs) open up enormous opportunities, allowing end customers to enjoy a wide range of cutting-edge safety, comfort, and convenience features. Well-organized cybersecurity management must go hand in hand with SDV development. Software developers must ensure security across every area of the system, not only within individual applications.

Cybersecurity is a relatively new concept in the automotive industry [1]. When automakers began introducing electronically controlled steering and braking systems, the likelihood of threats increased, and connectivity then opened the door to significantly greater risks. Direct vehicle connections to the internet are commonly cited as a source of cyber threats, but indirect connections, such as a mobile phone connected via USB or Bluetooth, are often overlooked. Even a vehicle that appears to have no connectivity at all may have a wireless tire pressure monitoring system or an on-board diagnostics module that allows access to vehicle information.

Here are some key areas related to this topic:

- Secure updates: To give consumers access to the latest features, today's vehicles download software updates wirelessly from the cloud, so these updates must be secure. Public key infrastructure (PKI) is a mechanism that allows manufacturers to digitally sign software so that the receiving system can verify its authenticity. Before releasing the software, the manufacturer uses a private (secret) key to sign it, that is, to compute a digital signature over a cryptographic hash of the software. When the vehicle downloads the software, it uses the corresponding, publicly available key to verify the signature. The underlying algorithm ensures that only content signed with the private key passes verification with the public key.

- Secure system boot: Manufacturers must also ensure that the system boots securely. When the vehicle is started, the system must verify the authenticity and integrity of its software, that is, confirm that the code was created by the manufacturer and has not been replaced or modified by an attacker.

- Secure vehicle network: As vehicles become increasingly complex and software-defined, many applications will share the same processors and networks to transmit data between processing nodes. For example, some infotainment applications might require vehicle speed and navigation data, while others might need battery management information. An in-vehicle network that also connects to cloud networks via mobile networks and Wi-Fi must be secured on multiple layers. The lowest layer is Media Access Control Security (MACsec), which operates at the data link layer and establishes a bidirectional encrypted connection between two directly communicating devices. MACsec can operate extremely quickly, encrypting and decrypting information at wire speed using specialized hardware. The next higher layer is Internet Protocol Security (IPsec), which operates at the network layer to authenticate and encrypt data packets between network nodes with IP addresses. IPsec can help protect data flowing across the network (through a router, to the cloud, and beyond), not just on the physical link between two points. Moving up the stack, manufacturers can use Transport Layer Security (TLS). This protocol runs on top of the transport layer (typically TCP), so protection is tied to the communicating processes rather than to IP addresses, which makes the security mechanism more flexible. TLS is now widely used in internet communications, and vehicles should use it when connecting to the cloud.
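At the top of this stack, a cloud-facing vehicle service would typically open TLS connections that authenticate the server certificate and refuse outdated protocol versions. The following is a minimal sketch using Python's standard `ssl` module. The CA bundle parameter, the telemetry function and the host name are hypothetical placeholders. Requiring TLS 1.2 as the minimum version is an assumption made for illustration, not a requirement stated here. MACsec and IPsec, by contrast, are normally configured in the network stack or in hardware rather than in application code.

```python
import socket
import ssl
from typing import Optional


def make_vehicle_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS context that verifies the backend server's certificate.

    ca_file: optional path to a (hypothetical) OEM backend CA bundle;
    when None, the system's default trust store is used.
    """
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # reject older protocol versions
    # create_default_context already sets these; they are repeated here for clarity.
    ctx.verify_mode = ssl.CERT_REQUIRED
    ctx.check_hostname = True
    return ctx


def send_telemetry(host: str, payload: bytes, port: int = 443) -> None:
    """Open a verified TLS connection and send a payload (illustrative only)."""
    ctx = make_vehicle_tls_context()
    with socket.create_connection((host, port), timeout=5) as raw:
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            tls.sendall(payload)


if __name__ == "__main__":
    ctx = make_vehicle_tls_context()
    print(ctx.minimum_version, ctx.verify_mode, ctx.check_hostname)
    # send_telemetry("telemetry.example.com", b"...")  # hypothetical endpoint
```

The key design choice is that verification is on by default and the host name is checked against the certificate. This means a spoofed backend on the same network cannot simply impersonate the manufacturer's cloud.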

Advanced connectivity

As autonomous driving becomes more common, implementing even higher levels of message encryption may become increasingly important. For example, a user could send a message to a vehicle requesting it be picked up from a specific address. This message would be cryptographically signed and delivered securely. New protocols can help with this, even involving multiple clouds if necessary. Furthermore, as automotive companies begin to hire more developers to build various software functions, ensuring no interference between applications becomes crucial. This is achieved by using hypervisors, containers, and other technologies to decouple software, even on shared hardware.
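Both the secure-update mechanism and the signed pickup request described above rest on digital signatures. The sign-then-verify flow can be illustrated with textbook RSA. The sketch is deliberately a toy, built on the following assumptions. The key comes from two fixed Mersenne primes, the SHA-256 hash is signed without padding, and no certificate chain is involved. It must therefore not be used for real software updates or messages. Production systems use vetted libraries and schemes such as RSA-PSS, ECDSA or Ed25519, with the public key distributed through the manufacturer's PKI. The function names are illustrative only.

```python
import hashlib

# Toy key pair (INSECURE, illustration only) built from two Mersenne primes.
P = 2**89 - 1
Q = 2**107 - 1
N = P * Q                              # public modulus
E = 65537                              # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))      # private exponent, kept secret by the manufacturer


def digest(data: bytes) -> int:
    """SHA-256 hash of the data, reduced modulo N so that it fits the toy key."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % N


def sign(data: bytes) -> int:
    """Manufacturer side: sign the hash with the private exponent D."""
    return pow(digest(data), D, N)


def verify(data: bytes, signature: int) -> bool:
    """Vehicle side: check the signature using only the public key (N, E)."""
    return pow(signature, E, N) == digest(data)


if __name__ == "__main__":
    image = b"ecu-firmware v2.1"                    # hypothetical update payload
    sig = sign(image)
    print(verify(image, sig))                       # genuine update
    print(verify(image + b" tampered", sig))        # modified update
```

The vehicle never needs the private exponent `D`. It holds only `N` and `E`, so extracting the key from a vehicle does not allow an attacker to sign new firmware.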

Radar technologies

Recent advances in vehicle radar technology will soon lead to a fundamental increase in radar capabilities, significantly enhancing the radar-centric approach to advanced driver assistance systems (ADAS).

There are two primary ways to improve ADAS perception: improve how the system interprets sensor data using machine learning, or improve the quality and accuracy of the real-world sensor data itself. Combining these approaches has a synergistic effect, producing a reliable model of the environment that vehicles can use to make intelligent driving decisions. While machine learning is constantly evolving, so is radar sensor technology. One example is the use of 3D air-waveguide technology in radar antennas to capture more precise signals and increase range. In automotive radar systems, 3D air-waveguide antennas help scan the surrounding area efficiently and receive weak echoes from the environment with low loss. By reducing losses in the transmitted and received signals, air-waveguide antennas make a more sensitive sensor possible while maintaining the same small physical radar footprint.
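The link between lower antenna losses and longer range follows from the radar range equation. In that equation, the maximum detection range scales with the fourth root of the received-power budget: R_max ∝ (P_t · G² · λ² · σ / ((4π)³ · P_min · L))^(1/4), where L is the total system loss. Reducing two-way losses by ΔL decibels therefore extends the range by a factor of 10^(ΔL/40). The sketch below evaluates this relation. The 210 m baseline and the loss reductions are hypothetical numbers chosen for illustration, not figures for any particular antenna.

```python
def range_scale(loss_reduction_db: float) -> float:
    """Factor by which maximum radar range grows when two-way losses drop by
    loss_reduction_db decibels (R_max is proportional to L ** -0.25)."""
    return 10 ** (loss_reduction_db / 40)


baseline_range_m = 210.0  # hypothetical baseline detection range
for delta_db in (1.0, 2.0, 3.0):
    new_range = baseline_range_m * range_scale(delta_db)
    print(f"{delta_db:.0f} dB less loss -> {new_range:.0f} m")
```

Because of the fourth-root relation, about 12 dB of loss reduction would be needed to double the range. A few decibels saved in the antenna therefore translate into a noticeable but not dramatic range gain.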

An alternative approach to self-parking

Radars with advanced 3D air-waveguide antenna technology could support a high-resolution perception mode that enables self-parking. Early self-parking systems rely on ultrasonic sensors to measure the width of parking spaces, which often means the vehicle must drive past a space to determine whether it is large enough before backing into it. With environment perception software that uses improved radar, the vehicle could determine the space's dimensions before driving past it and park in it directly.
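Once the perception system can see the whole kerbside ahead, finding a space reduces to searching for a large enough gap between detected obstacles. The sketch below assumes that detections have already been projected onto the kerb line as longitudinal intervals (start, end), in metres, occupied by parked vehicles. The interval values, the vehicle length and the manoeuvring margin are hypothetical.

```python
def find_parking_gaps(occupied, min_length, search_start, search_end):
    """Return free (start, end) gaps of at least min_length metres along the kerb.

    occupied: list of (start, end) intervals covered by detected obstacles.
    """
    gaps = []
    cursor = search_start
    for start, end in sorted(occupied):
        if start - cursor >= min_length:
            gaps.append((cursor, start))
        cursor = max(cursor, end)          # intervals may overlap
    if search_end - cursor >= min_length:
        gaps.append((cursor, search_end))
    return gaps


# Hypothetical radar-derived occupancy along 40 m of kerb ahead of the car.
parked_cars = [(0.0, 4.5), (5.5, 10.0), (16.8, 21.3), (21.9, 26.5), (33.0, 37.6)]
ego_length = 4.7   # metres (assumed)
margin = 1.3       # extra length needed to manoeuvre, metres (assumed)

print(find_parking_gaps(parked_cars, ego_length + margin, 0.0, 40.0))
```

With these values the car identifies two candidate spaces, between 10.0 m and 16.8 m and between 26.5 m and 33.0 m, without first driving past them.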

Aptiv's seventh-generation radar family illustrates this direction: by combining advanced antenna technology, support for high-speed data transmission, and enhanced software, it is intended as a foundation for the next generation of automated driving vehicles.

At the same time, for highway NOA (navigate on autopilot) functions, the detection range of a traditional radar at high speed is only 210 meters, while that of a precise 4D radar reaches 280 meters (some manufacturers even claim more than 300 meters). This allows targets to be identified earlier and thus meets the essential need for long-range forward perception.
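The practical value of the extra range can be expressed as additional reaction time. For illustration, assume a closing speed of 120 km/h towards a stationary object. This is a hypothetical scenario, not a figure from the manufacturers. The time between first detection and reaching the object is then simply range divided by speed:

```python
def time_to_reach(range_m: float, closing_speed_kmh: float) -> float:
    """Seconds between first detection and reaching a stationary target."""
    return range_m / (closing_speed_kmh / 3.6)


speed_kmh = 120.0  # assumed closing speed
for label, detection_range in [("conventional radar", 210.0), ("4D radar", 280.0)]:
    print(f"{label}: {time_to_reach(detection_range, speed_kmh):.1f} s")
```

Under this assumption the conventional radar leaves about 6.3 s and the 4D radar about 8.4 s. That is roughly 2 s of additional margin for planning a lane change or a comfortable braking manoeuvre.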

Currently, brands such as Li Auto (Ideal), Changan, and NIO (Weilai) are leading the widespread adoption of 4D radars. NIO, for example, will equip all models on its NT3 platform with 4D radars as standard, with a maximum detection range of up to 370 meters. Furthermore, the three distributed millimeter-wave radar arrays in the Huawei Maextro (Zunjie) S800 can also use 4D technology.

Moreover, according to Huawei, the distributed architecture further improves performance. Its detection capabilities surpass those of existing 4D millimeter-wave radars, its high-confidence detection range in rainy and foggy conditions has been increased by 60%, and the detection latency of frontal and side targets has been reduced by 40%.

Definitions, Classification, and Levels of Autonomy

[rczyba][✓ rczyba, 2025-10-12]

Autonomy of unmanned systems refers to their ability to self-manage, make decisions, and complete tasks with minimal or no human intervention. The scope of autonomy ranges from zero to full capability, often defined through models, and encompasses four fundamental functions: perception, orientation, problem-solving (planning), and action. Advances in autonomy enable unmanned systems to learn, adapt to changing environmental conditions, and perform complex tasks, driving innovation in various fields.

Levels of Ground Vehicle Autonomy

There are several ways to classify autonomy levels based on various criteria. In 2014, the American organization SAE International (formerly the Society of Automotive Engineers) adopted a classification of six levels of driving automation, which was subsequently revised in 2016. Following a decision by the National Highway Traffic Safety Administration (NHTSA), this classification is the official standard in the United States, and it is also the most widely used in European studies on autonomous driving technologies.

SAE International thus defines six levels of automation for autonomous vehicles, from Level 0 to Level 5, which have been adopted as an industry standard (see Figure 17).

- Level 0: The driver has full control of the vehicle and there are no automated systems.

- Level 1: Also known as “hands-on” automation. The driver performs the driving task, but a single assistance system, such as adaptive cruise control, parking assist, or lane-keeping assist, can support either steering or acceleration and braking. The driver must monitor the surroundings and be able to take full control at any time.

- Level 2: “Hands-off” automation means that the automated system can control steering as well as acceleration and braking at the same time. However, the driver must monitor the surroundings and be ready to take control of the vehicle whenever necessary. The “hands-off” principle should not be taken literally, and the SAE recommends that the hands remain in contact with the steering wheel to confirm that the driver is ready to take control.

- Level 3: Level 3 is referred to as “eyes-off” automation. The driver can focus on activities other than driving, such as using a phone or watching a movie. The automated system can respond to situations requiring immediate action, such as emergency braking, but the driver must still intervene when requested by the system.

- Level 4: The next level is “mind-off” automation. It's essentially similar to Level 3 in that the driver doesn't need to monitor their surroundings. In fact, they can fall asleep, as driver intervention isn't required, even in emergency situations. However, this level of autonomy is only supported in limited areas or under specific circumstances, such as traffic jams.

- Level 5: Level 5 means “steering wheel optional.” The car is fully autonomous and requires no human intervention.
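Software sometimes needs these level descriptions in machine-readable form, for example when a test framework must decide who is responsible for supervision and fallback. For such uses, the descriptions above can be condensed into a small lookup structure. The following is a simplified summary written for illustration. It assumes the usual SAE split of responsibilities, and the field and function names are hypothetical. It does not replace the definitions in the standard itself.

```python
from dataclasses import dataclass
from enum import IntEnum


class SaeLevel(IntEnum):
    """SAE levels of driving automation, Level 0 to Level 5."""
    L0 = 0
    L1 = 1
    L2 = 2
    L3 = 3
    L4 = 4
    L5 = 5


@dataclass(frozen=True)
class Responsibilities:
    driver_monitors_environment: bool  # must the human supervise the road at all times?
    driver_is_fallback: bool           # must the human take over when the system requests it?


# Simplified summary of the level descriptions (illustrative, not normative).
RESPONSIBILITIES = {
    SaeLevel.L0: Responsibilities(True, True),
    SaeLevel.L1: Responsibilities(True, True),
    SaeLevel.L2: Responsibilities(True, True),
    SaeLevel.L3: Responsibilities(False, True),
    SaeLevel.L4: Responsibilities(False, False),
    SaeLevel.L5: Responsibilities(False, False),
}


def driver_may_look_away(level: SaeLevel) -> bool:
    """True if the human may stop supervising the road while the feature is engaged."""
    return not RESPONSIBILITIES[level].driver_monitors_environment


for level in SaeLevel:
    r = RESPONSIBILITIES[level]
    print(f"Level {int(level)}: may look away={driver_may_look_away(level)}, "
          f"driver is fallback={r.driver_is_fallback}")
```

The boundary between Level 2 and Level 3 (supervision) and between Level 3 and Level 4 (fallback) is where the two flags change. These are the transitions the level descriptions above emphasise.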

Levels of Drone Autonomy

In general, autonomy or autonomous capability is defined in the context of decision-making or self-governance within a system. According to the Aerospace Technology Institute (ATI), autonomous systems can decide independently how to achieve mission objectives, without human intervention [39]. These systems can also learn and adapt to changing operating conditions. However, the degree of autonomy may depend on the design, functions, and specifics of the mission or system [40]. Autonomy can be broadly viewed as a spectrum of capabilities, from zero autonomy to full autonomy. The Pilot Authorization and Task Control (PACT) model assigns authorization levels from level 0 (full pilot authority) to level 5 (full system autonomy), mirroring the 0–5 scale used for autonomous vehicles in the automotive industry (see Figure 18).

Levels of autonomy in drone technology are typically divided into six distinct levels (0 to 5), each representing a gradual increase in the drone's ability to operate independently.

- Level 0: The pilot has complete control over every movement. The platforms are always 100% manually controlled.

- Level 1 – Remote Control: The pilot retains control of overall operations and vehicle safety. However, the drone can take over one or more essential functions for a specified period. While the drone does not have continuous control of the vehicle and never controls speed and direction simultaneously, it can assist with navigation and/or maintain altitude and position. The drone uses GNSS for flight stabilization, and all inputs regarding direction, altitude, and speed are entered manually. Obstacle detection functions are available at this level, but avoidance is performed manually by the pilot.

- Level 2 – Automated Flight Control (Assisted Autonomy): A pre-programmed flight path is transmitted to the autopilot, and the drone begins its mission, flying along waypoints. The pilot is still responsible for the safe control of the vehicle and must be ready to take control of the drone if an unexpected event occurs. Under certain conditions, the drone itself can control its heading, altitude, and speed, but the pilot retains overall responsibility, including monitoring airspace and flight conditions and responding to emergencies. Most manufacturers currently build drones at this level, where the platform can assist with navigation functions and allow the pilot to disengage from certain tasks.

- Level 3 – Partial Autonomy (Semi-Autonomous): As at Level 2, the drone can fly autonomously, but the pilot must remain alert and ready to take control at any time. The drone notifies the pilot when intervention is needed, acting as an emergency warning system. This level means that the drone can perform all functions “under certain conditions.”

- Level 4 – Cognitive Autonomy (Advanced Semi-Autonomous): A drone can be controlled by a human, but it does not always have to be. Under the right conditions, it can fly autonomously at all times. The drone is expected to have redundant systems so that it continues to operate if one system fails. At this level of autonomy, the “sense and avoid” function becomes “sense and navigate”: the drone detects obstacles along its flight path and actively avoids contact by changing its flight trajectory.

- Level 5 – Full Autonomy: The drone controls itself independently in any situation, without the need for human intervention, including full automation of all flight tasks in all conditions. Such drones do not exist yet. However, in the near future they are expected to use artificial intelligence tools for flight planning, in other words, autonomous learning systems with the ability to modify routine behavior.
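The shift from “sense and avoid” to “sense and navigate” at Level 4 can be illustrated geometrically. The drone checks whether the straight path to its next waypoint passes within a safety radius of a detected obstacle. If it does, the drone inserts a detour waypoint to the side of the obstacle. This 2D sketch rests on simplifying assumptions: point obstacles, a fixed safety radius, and a single lateral detour tried at a few hypothetical widths. Practical planners also account for vehicle dynamics, sensing uncertainty and 3D geometry.

```python
import math

Point = tuple[float, float]


def distance_to_segment(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the line segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Projection parameter of p onto the segment, clamped to [0, 1].
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def plan_leg(start: Point, goal: Point, obstacle: Point, safety_radius: float) -> list[Point]:
    """Return waypoints from start to goal, adding one detour waypoint if needed."""
    if distance_to_segment(obstacle, start, goal) >= safety_radius:
        return [start, goal]                      # direct path is clear
    dx, dy = goal[0] - start[0], goal[1] - start[1]
    length = math.hypot(dx, dy)
    if length == 0.0:
        raise ValueError("start and goal coincide")
    nx, ny = -dy / length, dx / length            # unit normal to the direct path
    ox, oy = obstacle
    side = (ox - start[0]) * nx + (oy - start[1]) * ny
    sign = -1.0 if side > 0 else 1.0              # shift the detour away from the obstacle
    for k in (1.5, 2.0, 3.0, 4.0):                # try progressively wider detours
        detour = (ox + sign * k * safety_radius * nx, oy + sign * k * safety_radius * ny)
        if (distance_to_segment(obstacle, start, detour) >= safety_radius
                and distance_to_segment(obstacle, detour, goal) >= safety_radius):
            return [start, detour, goal]
    raise ValueError("no simple detour found; full replanning would be required")


# Hypothetical mission leg: an obstacle is detected slightly left of the direct path.
print(plan_leg((0.0, 0.0), (10.0, 0.0), (5.0, 0.5), safety_radius=2.0))
```

For this example the drone keeps its goal but inserts a waypoint at (5.0, -2.5), so that both new legs stay at least 2 m from the obstacle.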

Another general but useful model describing autonomy levels in unmanned systems is the Autonomy Levels for Unmanned Systems (ALFUS) model [42]. The European Union Aviation Safety Agency (EASA), in one of its technical reports, provided information on autonomy levels and guidelines for human-autonomy interaction. According to EASA, the concept of autonomy, its levels, and human-autonomous system interactions are not yet established and remain actively discussed in various areas, including aviation, as there is currently no common understanding of these terms [43]. Because these concepts are still developing, they pose a major challenge for the unmanned aircraft regulatory environment.

The classification of autonomy levels in multi-drone systems is somewhat different. In multi-drone systems, several drones cooperate to perform a specific task, which requires individual drones to have a higher level of autonomy. The classification of autonomy levels is directly related to the distinction between flights within the visual line of sight of the pilot or observer (VLOS) and flights beyond visual line of sight (BVLOS), where particular attention is paid to flight safety. One way to address the autonomy issue is to classify the autonomy of drones and multi-drone systems into levels related to the hierarchy of tasks performed [44]. Such levels would have standard definitions and protocols to guide technology development and regulatory oversight. For single-drone autonomy models, two distinct levels are proposed: the vehicle control layer (Level 1) and the mission control layer (Level 2), see Figure 20. Multi-drone systems, on the other hand, have three levels: single-vehicle control (Level 1), multi-vehicle control (Level 2), and mission control (Level 3). In this hierarchical structure, Level 3 has the lowest priority and can be overridden by Level 2 or Level 1.
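The override rule at the end of the paragraph amounts to priority arbitration. When several layers issue commands at the same time, the command from the lowest-numbered active layer wins. Below is a minimal sketch for the three-level multi-drone hierarchy. The command strings and the function name are hypothetical, and a real system would also have to handle command timeouts and validity checks.

```python
from typing import Optional

LAYER_NAMES = {
    1: "single-vehicle control",
    2: "multi-vehicle control",
    3: "mission control",
}


def arbitrate(commands: dict[int, Optional[str]]) -> tuple[int, str]:
    """Pick the command of the highest-priority (lowest-numbered) active layer.

    commands maps layer number -> command string, or None if the layer is silent.
    """
    active = {level: cmd for level, cmd in commands.items() if cmd is not None}
    if not active:
        raise ValueError("no layer issued a command")
    level = min(active)
    return level, active[level]


if __name__ == "__main__":
    # Mission control wants to continue the survey, but the multi-vehicle layer
    # detects a conflict with another drone and orders a hold.
    level, cmd = arbitrate({1: None, 2: "hold position", 3: "continue survey leg"})
    print(f"executing '{cmd}' from {LAYER_NAMES[level]}")
```

Keeping arbitration in one small, testable function makes the priority order explicit. That matters when the hierarchy is later reviewed for BVLOS safety cases.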

Legal, Ethical, and Regulatory Frameworks

[rahulrazdan][✓ rczyba, 2025-10-12]

In society, products operate within the confines of a legal governance structure. The legal governance structure is one of the great inventions of civilization. Its primary role is to channel disputes away from unstructured expression, and perhaps even violence, into the domain of the courts (figure 1). To be effective, legal governance structures must be perceived as fair and predictable. Fairness is achieved through methods such as due process procedures, transparency and public proceedings, and neutral decision-makers (judges, juries, arbitrators). Predictability is achieved through the concept of precedent: past rulings carry heavy weight in decision making, and diverging from precedent is an extraordinary event. Precedent gives the legal system stability. The combination of fairness and predictability shifts the resolution of disputes to a more orderly process, which promotes societal stability.

How does this work in practice, and how does it connect to product development?

As shown in figure 2, the legal framework is established in three major stages. First, law-making bodies (legislators) pass laws. However, in practice, legislators cannot specify all aspects, so they empower administrative entities (regulators) to codify the details of the law. Finally, regulators often do not have the technical knowledge to codify all aspects of the law and rely on independent industry groups such as SAE International (formerly the Society of Automotive Engineers) or the Institute of Electrical and Electronics Engineers (IEEE) for technical expertise.

Once the framework exists, disputes arise in the field and must be adjudicated by the legal system. The typical process is a trial, conducted under the strict procedures established for fairness, in which the court applies the legal framework to the facts and reaches a judgement. The facts of the case can lead to three potential outcomes. In the first situation, the facts are covered by the legal framework, so no further action is needed relative to the governance structure. In the second, the facts expose an “edge” condition in the governance structure. Here, the court looks for previous cases that might fit (the concept of precedent) and uses them to make its judgement. If no such case exists, the court can establish precedent with its judgement, which then carries weight in future decisions. Finally, in rare situations, the facts of the case fall in a field so new that there is little existing body of law. In these situations, the courts may make a judgement, but there is often a call for law-making bodies to establish deeper legal frameworks.

In fact, autonomous vehicles (AVs) are considered to be one of these situations. Why? In traditional automobiles, product liability law is attached to the car, while liability for actions taken using the car is attached to the driver. Further, product liability is often managed at the federal level and driver licensing more locally. Surprisingly, however, as the figure below shows, there is a body of law dealing with autonomous vehicles from the distant past. In the days of horses, accidents happened, and a sophisticated liability structure emerged. In this structure, if a person directed his horse into an accident, the driver was at fault. However, if a bystander did something to “spook” the horse, the bystander was at fault. Finally, there was also the concept of “no fault” when a horse unexpectedly went rogue. A discerning reader will notice that this body of law emerged from a deep understanding of the characteristics of a horse. In legal terms, it creates an “expectation.” What are the “expectations” for a modern autonomous vehicle? This is currently a highly debated point in the industry.

Overall, whatever value products provide to their consumers is weighed against the potential harm they cause, and this trade-off leads to the concept of legal product liability. While laws diverge across geographies, their fundamental tenets share two key elements: expectation and harm. Expectation, judged by “reasonable behavior given a totality of the facts,” attaches liability. For example, it is clearly expected that a train cannot stop instantly for someone standing in front of it, whereas no such expectation holds for most autonomous driving situations. Harm is the second key concept: AI recommendation systems for movies are not held to the same standards as autonomous vehicles because the harm they can cause is far smaller. Mechanically, the governance framework for liability is developed through legislative actions and associated regulations. The framework is tested in the court system under the particular circumstances, or facts, of each case. To provide stability to the system, the database of cases and decisions is viewed as a whole under the concept of precedent. Legal points are clarified by the appellate courts, whose decisions on how the law applies set precedent.

What does this whole structure look like in practice? Consider the airborne domain shown in the figure above, where the governance framework consists of enacted law (in this case, US law), associated cases that provide legal precedent, regulations, and industry standards. Any product in the airborne sector must comply with this framework before it can be released to the marketplace.

Ref:

- Razdan, R. (2019). “Unsettled Technology Areas in Autonomous Vehicle Test and Validation,” EPR2019001, Jun. 12, 2019.

- Razdan, R. (2019). “Unsettled Topics Concerning Automated Driving Systems and the Transportation Ecosystem,” EPR2019005, Nov. 5, 2019.

- Ross, K. (2023). “Product Liability Law and Its Effect on Product Safety,” In Compliance Magazine. [Online]. Available: https://incompliancemag.com/product-liability-law-and-its-effect-on-product-safety/

Introduction to Validation and Verification in Autonomy

[rahulrazdan][✓ rahulrazdan, 2025-06-16]

As discussed in the governance module, whatever value products provide to their consumers is weighed against the potential harm they cause, and this trade-off leads to the concept of legal product liability. From a product development perspective, the combination of laws, regulations, and legal precedent forms the overriding governance framework around which the system specification must be constructed [3]. Validation ensures that a product design meets the user's needs and requirements, while verification ensures that the product is built correctly according to its design specifications.

Fig. 1. V&V and Governance Framework.

The Master V&V (MaVV) process must demonstrate that the product has been tested to a degree that is reasonable given its potential to cause harm. It does so using three important concepts [4]:

- Operational Design Domain (ODD): This defines the environmental conditions and operational model under which the product is designed to work.

- Coverage: This defines the completeness over the ODD to which the product has been validated.

- Field Response: This defines the procedures used, when failures do occur, to correct product design shortcomings and prevent future harm.

As figure 1 shows, the Verification & Validation (V&V) process is the key input into the governance structure that attaches liability, and under that structure each of these elements must demonstrate “reasonable due diligence.” For example, an ODD in which an autonomous vehicle gives up control a millisecond before an accident would be unreasonable.
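Coverage can be quantified in many ways. One simple form, sketched below, assumes the ODD has been discretized into a small grid of hypothetical dimensions (`weather`, `time_of_day`, `speed_band`) and counts the fraction of grid cells exercised by at least one executed test. The dimension names, values, and helper names are illustrative only and are not taken from any standard.

```python
from itertools import product

# Hypothetical, coarse ODD dimensions chosen only for illustration.
ODD_DIMENSIONS = {
    "weather": ("clear", "rain", "fog"),
    "time_of_day": ("day", "night"),
    "speed_band": ("low", "medium", "high"),
}


def all_bins(dimensions):
    """Enumerate every cell of the discretized ODD as a tuple of (dimension, value) pairs."""
    names = sorted(dimensions)
    return {tuple(zip(names, values))
            for values in product(*(dimensions[n] for n in names))}


def coverage(executed_tests, dimensions):
    """Return the fraction of ODD cells hit by at least one test, plus the untested cells."""
    bins = all_bins(dimensions)
    names = sorted(dimensions)
    hit = {tuple((n, test[n]) for n in names) for test in executed_tests}
    hit &= bins  # ignore tests whose values fall outside the declared ODD
    return len(hit) / len(bins), bins - hit


tests = [
    {"weather": "clear", "time_of_day": "day", "speed_band": "low"},
    {"weather": "clear", "time_of_day": "night", "speed_band": "medium"},
    {"weather": "rain", "time_of_day": "day", "speed_band": "high"},
]
ratio, missing = coverage(tests, ODD_DIMENSIONS)
print(f"ODD cell coverage: {ratio:.0%}, {len(missing)} cells untested")
# ODD cell coverage: 17%, 15 cells untested
```

Cell coverage of this kind only shows which regions were touched, not how thoroughly each was explored, so it is best read as a floor under the completeness argument rather than the argument itself.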

Mechanically, MaVV is implemented with a Minor V&V (MiVV) process consisting of:

- Test Generation: From the allowed ODD, test scenarios are generated.

- Execution: Each test is “executed” on the product under development. Mathematically, execution is a functional transformation that maps the test stimulus to results.

- Criteria for Correctness: The results of the execution are evaluated for success or failure against a crisp criterion for correctness.
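The three MiVV steps map naturally onto three functions. The following is a minimal sketch, assuming a toy, hypothetical product (a speed governor with a 25 m/s cap) in place of a real system under test; `run_mivv`, `generate`, `governor`, and `never_exceeds_limit` are illustrative names, not part of any existing framework.

```python
import random
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class TestResult:
    stimulus: Dict[str, float]
    output: float
    passed: bool


def run_mivv(generate: Callable[[random.Random], Dict[str, float]],
             execute: Callable[[Dict[str, float]], float],
             is_correct: Callable[[Dict[str, float], float], bool],
             n_tests: int,
             seed: int = 0) -> List[TestResult]:
    """One MiVV pass: generate stimuli from the ODD, execute them, judge each result."""
    rng = random.Random(seed)  # seeded so any failing stimulus can be regenerated
    results = []
    for _ in range(n_tests):
        stimulus = generate(rng)                 # Test Generation
        output = execute(stimulus)               # Execution: a function of the stimulus
        passed = is_correct(stimulus, output)    # Criteria for Correctness
        results.append(TestResult(stimulus, output, passed))
    return results


# Toy, hypothetical product: a governor that must never command more than 25 m/s.
SPEED_LIMIT = 25.0


def generate(rng: random.Random) -> Dict[str, float]:
    return {"requested_speed": rng.uniform(0.0, 40.0)}


def governor(stimulus: Dict[str, float]) -> float:
    return min(stimulus["requested_speed"], SPEED_LIMIT)


def never_exceeds_limit(stimulus: Dict[str, float], output: float) -> bool:
    return output <= SPEED_LIMIT


results = run_mivv(generate, governor, never_exceeds_limit, n_tests=1000)
failures = [r for r in results if not r.passed]
print(f"{len(results)} tests, {len(failures)} failures")  # 1000 tests, 0 failures
```

Seeding the generator is a deliberate choice: a failing stimulus can be reproduced exactly, which matters when the failure later has to be diagnosed and fixed.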

In practice, each of these steps can involve considerable complexity and cost. Since the ODD can be a very wide state space, generating stimulus intelligently and efficiently is critical. Stimulus generation typically starts as a manual process, but this quickly fails to scale. In virtual execution environments, pseudo-random directed methods are used to accelerate the process. In limited situations, symbolic or formal methods can be used to carry large state spaces mathematically through the whole design execution phase. Symbolic methods have the advantage of completeness but face computational explosion, because many of the underlying problems are NP-complete.
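Pseudo-random directed generation is often implemented as constrained-random stimulus with a bias toward interesting regions. The sketch below shows one possible form, assuming hypothetical ODD parameters and ranges, a hypothetical cross-parameter constraint (the pedestrian must lie within visibility range), and a simple bias that draws a fraction of values near range boundaries on the assumption that corner cases tend to sit there. The names `directed_random_value` and `generate_scenarios` are illustrative.

```python
import random

# Hypothetical ODD parameter ranges (illustrative values only).
RANGES = {
    "ego_speed_mps": (0.0, 30.0),
    "pedestrian_distance_m": (5.0, 120.0),
    "visibility_m": (50.0, 500.0),
}


def directed_random_value(rng, low, high, edge_bias, edge_width=0.05):
    """Draw a value in [low, high]; with probability edge_bias, draw near a range edge."""
    if rng.random() < edge_bias:
        width = (high - low) * edge_width
        if rng.random() < 0.5:
            return rng.uniform(low, low + width)
        return rng.uniform(high - width, high)
    return rng.uniform(low, high)


def satisfies_constraints(s):
    """Hypothetical cross-parameter constraint: the pedestrian must be within visibility."""
    return s["pedestrian_distance_m"] <= s["visibility_m"]


def generate_scenarios(n, seed, edge_bias=0.3):
    """Generate n constrained-random scenarios, reproducibly for a given seed."""
    rng = random.Random(seed)
    scenarios = []
    while len(scenarios) < n:
        s = {name: directed_random_value(rng, lo, hi, edge_bias)
             for name, (lo, hi) in RANGES.items()}
        if satisfies_constraints(s):  # reject stimuli outside the legal ODD
            scenarios.append(s)
    return scenarios


batch = generate_scenarios(5, seed=42)
for s in batch:
    print({k: round(v, 1) for k, v in s.items()})
```

Rejection sampling keeps the sketch short; when constraints reject most draws, a real generator would more likely solve for legal values directly.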

The execution stage can be done physically (such as on the test track above), but this process is expensive and slow, offers limited controllability and observability, and can be dangerous in safety-critical situations. In contrast, virtual methods offer lower cost, higher speed, full controllability and observability, and no safety risk. Virtual methods also have the great advantage of performing the V&V task well before the physical product is constructed. This leads to the classic V chart shown in figure 1. However, since virtual methods are models of reality, they introduce inaccuracy into the testing domain, whereas physical methods are accurate by definition. Finally, virtual and physical methods can be intermixed using concepts such as Software-in-the-Loop (SIL) or Hardware-in-the-Loop (HIL).

The observable results of executing the generated stimulus are captured to determine correctness. Correctness is typically defined by either a golden model or an anti-model. The golden model, typically virtual, is an independently verified model whose results can be compared with those of the product under test. Even then, there is usually a divergence between the abstraction level of the golden model and that of the product, which must be managed. Golden model methods are often used in computer architectures (e.g., ARM, RISC-V). The anti-model consists of error states that the product must not enter, so correct behavior is the state space outside those error states. In the autonomous vehicle space, for example, an error state might be an accident or a violation of any number of other constraints.

MaVV consists of building a database of the various explorations of the ODD state space and, from that database, building an argument for completeness. The argument typically takes the form of a probabilistic analysis. After the product is in the field, field returns are diagnosed, and one must always ask: why did my original process not catch this issue? Once the gap is found, the test methodology is updated so that the same class of issue is caught going forward. The V&V process is critical both to building a product that meets customer expectations and to documenting the “reasonable” due diligence required for product liability in the governance framework.
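The two correctness styles and the probabilistic argument can each be sketched in a few lines. This is an illustration under stated assumptions, not the method of any particular tool: `golden_model_check` compares product outputs with an independent reference within a tolerance that stands in for the abstraction gap, `anti_model_check` flags any visited state that matches a hypothetical error predicate, and `zero_failure_upper_bound` computes one common form of probabilistic bound, the upper confidence limit on per-test failure probability after n independent passing tests, 1 - (1 - c)^(1/n), which is roughly 3/n at 95% confidence (the "rule of three"). Scenario tests are rarely truly independent, so that number should be read as indicative only.

```python
from typing import Callable, Sequence


def golden_model_check(product_outputs: Sequence[float],
                       golden_outputs: Sequence[float],
                       tolerance: float) -> bool:
    """Pass if every product output matches the golden (reference) output within tolerance.

    The tolerance stands in for the abstraction gap between golden model and product.
    """
    if len(product_outputs) != len(golden_outputs):
        return False
    return all(abs(p - g) <= tolerance for p, g in zip(product_outputs, golden_outputs))


def anti_model_check(states: Sequence[dict],
                     error_predicates: Sequence[Callable[[dict], bool]]) -> bool:
    """Pass if no visited state satisfies any error predicate."""
    return not any(pred(state) for state in states for pred in error_predicates)


def zero_failure_upper_bound(n_tests: int, confidence: float = 0.95) -> float:
    """Upper confidence bound on per-test failure probability after n independent passes."""
    return 1.0 - (1.0 - confidence) ** (1.0 / n_tests)


# Hypothetical error states for a car-following scenario.
def collision(state: dict) -> bool:
    return state["gap_m"] <= 0.0


def speeding(state: dict) -> bool:
    return state["speed_mps"] > state["limit_mps"]


trace = [
    {"gap_m": 30.0, "speed_mps": 12.0, "limit_mps": 13.9},
    {"gap_m": 18.5, "speed_mps": 13.5, "limit_mps": 13.9},
    {"gap_m": 9.2, "speed_mps": 10.1, "limit_mps": 13.9},
]
print(anti_model_check(trace, [collision, speeding]))               # True
print(golden_model_check([1.00, 2.01], [1.0, 2.0], tolerance=0.05))  # True
print(f"{zero_failure_upper_bound(3000):.5f}")                      # 0.00100, close to 3/3000
```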

Finally, the product development process is typically focused on defining an ODD and validating against it. In modern times, however, an additional concern is adversarial attacks (cybersecurity), in which an adversary wants to hijack the system for nefarious purposes. In this case, the product owner must not only validate against the ODD but also detect when the system is operating outside it. After detection, the best-case scenario is to safely redirect the system back into the ODD space. For cyber-physical systems, cybersecurity risk typically splits into three levels:

- OTA Security: If an adversary can manipulate Over-the-Air (OTA) software updates, they can take over a large number of devices quickly. A worst-case example would be a compromised Tesla OTA update that turns Teslas into collision engines.

- Remote Control Security: If the adversary can take over a car remotely, they can cause harm to the occupants as well as third parties.

- Sensor Spoofing: In this situation, the adversary uses local physical assets to fool the sensors of the target. GPS jamming or spoofing are active examples.
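Detecting operation outside the ODD can be pictured as a runtime monitor that checks ODD limits and cross-checks independent sensors. The sketch below assumes hypothetical limits and a hypothetical plausibility rule: GNSS position and wheel-odometry dead reckoning, expressed in the same local metric frame, should not diverge by more than a few metres, so a large divergence is treated as possible GPS spoofing or jamming. The `MINIMAL_RISK_MANEUVER` mode name is a placeholder for whatever safe redirection a real system uses, and a real monitor would also have to bound odometry drift over time.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Snapshot:
    gps_xy: Tuple[float, float]   # GNSS position, metres in a local frame
    odom_xy: Tuple[float, float]  # dead-reckoned position from odometry/IMU, same frame
    speed_mps: float
    weather: str


# Hypothetical ODD limits and plausibility threshold, for illustration only.
ODD_MAX_SPEED_MPS = 15.0
ODD_WEATHER = {"clear", "overcast"}
MAX_GPS_ODOM_DIVERGENCE_M = 5.0


def violations(s: Snapshot) -> List[str]:
    """List every reason the current snapshot appears to lie outside the ODD."""
    reasons = []
    divergence = math.dist(s.gps_xy, s.odom_xy)
    if divergence > MAX_GPS_ODOM_DIVERGENCE_M:
        # Two independent position sources disagreeing may indicate GPS spoofing or jamming.
        reasons.append(f"GPS/odometry divergence {divergence:.1f} m")
    if s.speed_mps > ODD_MAX_SPEED_MPS:
        reasons.append("speed outside ODD")
    if s.weather not in ODD_WEATHER:
        reasons.append("weather outside ODD")
    return reasons


def supervise(s: Snapshot) -> str:
    """Continue nominally inside the ODD; otherwise request a safe fallback."""
    return "MINIMAL_RISK_MANEUVER" if violations(s) else "NOMINAL"


print(supervise(Snapshot((100.0, 0.0), (100.5, 0.2), 10.0, "clear")))  # NOMINAL
print(supervise(Snapshot((160.0, 0.0), (100.5, 0.2), 10.0, "clear")))  # MINIMAL_RISK_MANEUVER
```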

In terms of governance, the product developer is expected to exercise reasonable due diligence to minimize these issues. The level of validation required is dynamic and tracks the norm in the industry.

Validation Requirements across Domains

[rahulrazdan][✓ raivo.sell, 2025-09-18]

In terms of domains, the Operational Design Domain (ODD) is the driving factor, and it typically has two dimensions: the operational model and the physical domain (ground, airborne, marine, space). On the ground, passenger AVs are perhaps the most well-known face of autonomy, with robo-taxi services and self-driving consumer vehicles gradually entering urban environments. Companies like Waymo, Cruise, and Tesla have taken different approaches to ODDs. Waymo’s fully driverless cars operate in sunny, geo-fenced suburbs of Phoenix with detailed mapping and remote supervision. Cruise began service in San Francisco, originally operating only at night to reduce complexity. Tesla’s Full Self-Driving (FSD) Beta aims for broader generalization, but it still relies heavily on driver supervision and is limited by weather and visibility challenges.

Transit shuttles, though less publicized, have quietly become a practical application of AVs in controlled environments. These low-speed vehicles typically operate in geo-fenced areas such as university campuses, airports, or business parks. Companies like Navya, Beep, and EasyMile deploy shuttles that follow fixed routes and schedules, interacting minimally with complex traffic scenarios. Their ODDs are tightly defined: they may not operate in rain or snow, often run only during daylight, and avoid high-speed or mixed-traffic conditions. In many cases, a remote operator monitors operations or is available to intervene if needed.

Delivery robots represent a third class of autonomous mobility: compact, lightweight vehicles designed for last-mile delivery. Their ODDs are perhaps the narrowest, but that is by design. These robots, from companies like Starship, Kiwibot, and Nuro, navigate sidewalks, crosswalks, and short street segments in suburban or campus environments. They operate at pedestrian speeds (typically under 10 mph), carry small payloads, and avoid extreme weather, high traffic, or unstructured terrain. Because they do not carry passengers, safety thresholds and regulatory oversight can differ significantly.
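An ODD of the kind described for shuttles and delivery robots can be written down as data and checked mechanically. The sketch below encodes the two dimensions named earlier, the physical domain plus operational-model limits. Apart from the 10 mph delivery-robot ceiling taken from the text, every value (allowed weather, the 15 mph shuttle limit) is an illustrative placeholder, and `OddSpec` and `admits` are hypothetical names.

```python
from dataclasses import dataclass
from enum import Enum


class PhysicalDomain(Enum):
    GROUND = "ground"
    AIRBORNE = "airborne"
    MARINE = "marine"
    SPACE = "space"


@dataclass(frozen=True)
class OddSpec:
    name: str
    domain: PhysicalDomain      # physical-domain dimension
    max_speed_mph: float        # operational-model limits follow
    allowed_weather: frozenset
    daylight_only: bool


# Illustrative entries: only the 10 mph delivery-robot ceiling comes from the text.
SHUTTLE = OddSpec("transit shuttle", PhysicalDomain.GROUND, 15.0,
                  frozenset({"clear", "overcast"}), daylight_only=True)
DELIVERY_ROBOT = OddSpec("delivery robot", PhysicalDomain.GROUND, 10.0,
                         frozenset({"clear", "overcast"}), daylight_only=True)


def admits(spec: OddSpec, speed_mph: float, weather: str, is_daylight: bool) -> bool:
    """True if the requested operating condition lies inside the ODD."""
    return (speed_mph <= spec.max_speed_mph
            and weather in spec.allowed_weather
            and (is_daylight or not spec.daylight_only))


print(admits(DELIVERY_ROBOT, 6.0, "clear", is_daylight=True))  # True
print(admits(SHUTTLE, 10.0, "rain", is_daylight=True))         # False
```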

Weather is a particularly limiting factor across all autonomous systems. Rain, snow, fog, and glare interfere with LIDAR, radar, and camera performance, especially for smaller robots that operate close to the ground. Most AV deployments today restrict operations to fair-weather conditions. This is especially true for delivery robots and transit shuttles, which often halt operations during storms. While advanced sensor fusion and predictive modeling promise improvements, true all-weather autonomy remains a significant technical challenge. The intersection of weather and autonomy is an active research area [1].

Another ODD dimension is time of day. Nighttime operation brings unique difficulties for AVs: reduced visibility, increased pedestrian unpredictability, and, in urban areas, more erratic driver behavior. Some systems (like Waymo in Chandler, AZ) now operate 24/7, but most deployments, particularly delivery robots and shuttles, remain restricted to daylight hours. Tesla's FSD does operate at night, but it still requires human oversight.

Infrastructure also shapes ODDs in crucial ways. Many AV systems depend on high-definition maps, lane-level GPS, and even smart traffic signals to guide their decisions. In geo-fenced environments, where the route and surroundings are highly predictable, this infrastructure dependency is manageable. But for broader ODDs, where environments may change frequently or lack digital maps, achieving safe autonomy becomes much harder. That is why passenger AVs today generally avoid rural areas, unpaved roads, or newly constructed zones.
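Two of the constraints above, geo-fencing and operating hours, reduce to simple geometric and clock checks. The sketch below uses the standard ray-casting point-in-polygon test and assumes coordinates already projected into a local planar frame in metres (raw latitude/longitude would need projecting first); the campus polygon and the operating windows are hypothetical.

```python
from datetime import time


def inside_geofence(point, polygon):
    """Ray-casting point-in-polygon test for a simple polygon of (x, y) vertices.

    A horizontal ray is cast from the point; an odd number of edge crossings means
    the point is inside. Points lying exactly on an edge may be classified either way.
    """
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # this edge straddles the ray's height
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def within_hours(now, start, end):
    """Operating-hours check that also handles windows wrapping past midnight."""
    if start <= end:
        return start <= now < end
    return now >= start or now < end


# Hypothetical campus geofence (metres, local frame) and operating windows.
campus = [(0.0, 0.0), (400.0, 0.0), (400.0, 300.0), (0.0, 300.0)]
print(inside_geofence((100.0, 100.0), campus))              # True
print(inside_geofence((500.0, 100.0), campus))              # False
print(within_hours(time(22, 0), time(7, 0), time(19, 0)))   # False: daylight-only service
print(within_hours(time(23, 0), time(22, 0), time(5, 30)))  # True: night-only service
```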

Regulatory environments further shape ODDs. In the U.S., states like California, Arizona, and Florida have developed AV testing frameworks, but each differs in what it permits. For instance, California limits fully driverless vehicles to certain urban zones with strict reporting requirements. Delivery robots are often regulated at the city level—some cities allow sidewalk bots, others ban them outright. Transit shuttles often receive special permits for low-speed operation on limited routes. These regulatory boundaries translate directly into ODD constraints.
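One way to read "regulatory boundaries translate directly into ODD constraints" is that the deployable ODD is the intersection of what the product was designed for and what a permit allows. The sketch below assumes a hypothetical `Envelope` with a speed cap, named zones, and road types; the zone names and the 6 mph permit cap are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Envelope:
    max_speed_mph: float
    zones: frozenset       # hypothetical named operating areas
    road_types: frozenset  # e.g. "sidewalk", "crosswalk", "street"


def intersect(design: Envelope, permit: Envelope) -> Envelope:
    """Deployable ODD = what the product was designed for AND what the permit allows."""
    return Envelope(
        max_speed_mph=min(design.max_speed_mph, permit.max_speed_mph),
        zones=design.zones & permit.zones,
        road_types=design.road_types & permit.road_types,
    )


def is_deployable(env: Envelope) -> bool:
    """An empty zone or road-type set (or zero speed) leaves nowhere to operate."""
    return env.max_speed_mph > 0 and bool(env.zones) and bool(env.road_types)


robot_design = Envelope(10.0, frozenset({"campus", "downtown", "suburb_a"}),
                        frozenset({"sidewalk", "crosswalk", "street"}))
city_permit = Envelope(6.0, frozenset({"campus", "suburb_a"}),
                       frozenset({"sidewalk", "crosswalk"}))
deployable = intersect(robot_design, city_permit)
print(deployable.max_speed_mph, sorted(deployable.zones), sorted(deployable.road_types))
# 6.0 ['campus', 'suburb_a'] ['crosswalk', 'sidewalk']

outright_ban = Envelope(0.0, frozenset(), frozenset())
print(is_deployable(intersect(robot_design, outright_ban)))  # False
```

A city that bans sidewalk robots outright corresponds to an empty permit, which leaves the robot with no deployable ODD at all.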

In terms of physical domains, ground-based autonomous systems, especially in automotive contexts, are the most commercially visible. Self-driving vehicles operate in human-dense environments, requiring perception systems to identify pedestrians, cyclists, vehicles, and traffic infrastructure. Validation here relies heavily on scenario-based testing, simulation, and controlled pilot deployments. Standards like ISO 26262 (functional safety), ISO/PAS 21448 (SOTIF), and UL 4600 (autonomy system safety) guide safety assurance. Regulatory frameworks are evolving state by state or country by country, with ODD restrictions acting as practical constraints on deployment.
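Scenario-based testing typically takes one logical scenario (for example, a pedestrian stepping into the lane) and sweeps its parameters into many concrete test cases. The sketch below does this with a constant-deceleration stopping model; the 0.5 s reaction latency and 6 m/s² deceleration are hypothetical values, and "cannot stop before reaching the pedestrian" plays the role of the anti-model error state.

```python
from itertools import product


def stopping_distance_m(speed_mps, reaction_s, decel_mps2):
    """Distance covered during the reaction latency plus constant-deceleration braking."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2.0 * decel_mps2)


def pedestrian_crossing_sweep(speeds_mps, distances_m, reaction_s=0.5, decel_mps2=6.0):
    """Grid-sweep one logical scenario; return the concrete cases that end in a collision."""
    failures = []
    for v, d in product(speeds_mps, distances_m):
        if stopping_distance_m(v, reaction_s, decel_mps2) >= d:  # error state: cannot stop in time
            failures.append((v, d))
    return failures


fails = pedestrian_crossing_sweep(speeds_mps=[5.0, 10.0, 15.0], distances_m=[10.0, 20.0, 30.0])
print(fails)  # [(10.0, 10.0), (15.0, 10.0), (15.0, 20.0)]
```

A grid sweep is exhaustive only over the chosen grid points; between them, the random and coverage-driven methods discussed earlier are still needed.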